wpiman

I completely agree with Mike here. You are masking out part of your image because your zone doesn't cover the full frame.

A couple of other things:

1. You are triggering on A>B, so you will only get cars going left to right in your image. If you want both directions, try A-B. You might not even want to use zone crossing at all, so you catch more plates. I use A-B to capture motion direction, and I have a cloned camera that just uses simple motion detection so I don't miss any.

2. Your pre-trigger is set to one image and your post-trigger to twenty. You might want to set them to something like 10/10 instead of 1/20; as it stands you might be missing some good-quality frames. You are also sending a frame every 100 ms. That is what I do, and my camera frame rate is set to 10 fps so it matches. I'd recommend setting your camera frame rate to 10 fps as well. Motion detection seems to be more reliable with a lower frame rate.
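To make the 1/20 versus 10/10 comparison concrete, here is a quick Python sketch of the arithmetic. It assumes the pre- and post-trigger settings are counted in frames and that frames go out at a fixed 100 ms interval; `capture_window` and `fps_for_interval` are made-up helper names for illustration, not anything in Blue Iris or CP.AI.

```python
# Hypothetical helpers: assumes pre/post-trigger values are frame counts
# and frames are sent at a fixed interval (100 ms, as in the post above).

def capture_window(pre_frames: int, post_frames: int, interval_ms: int = 100):
    """Return (seconds covered before the trigger, seconds covered after it)."""
    return pre_frames * interval_ms / 1000, post_frames * interval_ms / 1000


def fps_for_interval(interval_ms: int) -> float:
    """Camera frame rate that delivers exactly one new frame per interval."""
    return 1000 / interval_ms


for pre, post in [(1, 20), (10, 10)]:
    before, after = capture_window(pre, post)
    print(f"{pre}/{post}: {before:.1f} s before, {after:.1f} s after trigger")

print(f"100 ms interval matches {fps_for_interval(100):.0f} fps")
```

Under those assumptions, 1/20 covers only 0.1 s before the trigger and 2.0 s after, while 10/10 splits it evenly at 1.0 s each side, which is why a sharp frame from just before motion trips can get skipped with 1/20.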

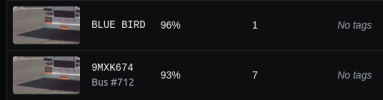

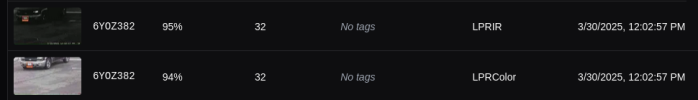

I am not sure how multiple plates in one image will work with BI. I know CP.AI will capture them. As for the ALPR database, no idea there either.