Hi,

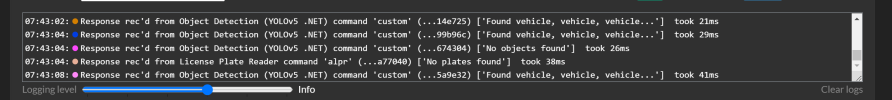

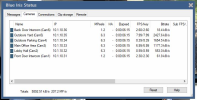

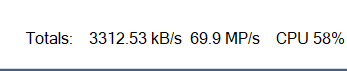

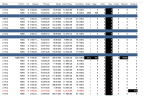

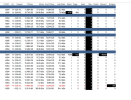

I've attached 3 images of my setup. I have 24 cameras: two are 4K, and the others range down to HD/5MP. My aim was to have the cameras send ONVIF motion detection events to Blue Iris and then have an RTX 3070 handle the AI detection. The two 4K cameras I want motion detection on are triggering via ONVIF, but each time one does, my CPU spikes to 100% and some cameras get bottleneck/FPS errors, which I guess is simply the CPU not being able to keep up. When no cameras are triggered, the CPU runs at around 30%. Continuous recording is on for all cameras, and live view uses sub streams for most. The only reason I can see for the CPU spike is the switch to the main stream on triggering, but if I run the camera permanently on the main stream the CPU only goes up 5-10%, so I can't see that being the issue either.

Everything is on gigabit switches, mostly all down a single Cat5e cable. Continuous recording is on, so I assume the main streams are always being recorded and it's just the live view where I'm seeing the sub stream.

Any support would be appreciated.
