I'm posting this thread for anyone considering making the transition from the DeepStack CPU version to the GPU version.

Hopefully this will give you an impression of what you can expect in terms of enhanced performance.

Please note some of my observations are system-specific. My system specs:

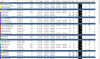

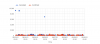

The next 6 screenshots show DeepStack processing times data for 5 cameras over a period of 3 weeks. Two images are shown for each week:

1) Period 1: the week before the P400 was installed.

2) Period 2: the week during which the P400 and DS GPU version were installed (this event took place before midnight on day 2)

3) Period 3: the week after the P400 card was installed.

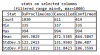

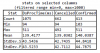

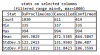

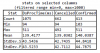

The next 2 images compare the Deepstack processing time statistics for Periods 1 & 3 (7 days on the CPU version vs 7 days on the GPU version). Please note that the statistical analyses excludes the long-duration event 'outliers' and use only the data points between 0 and 1000 msec. This approach was taken to provide a 'cleaner', more meaningful comparison when the system is running 'normally' (not stressed).

This last screenshot is a mark-up of the expanded scale data for Period 2.

Observations:

Hopefully this will give you an impression of what you can expect in terms of enhanced performance.

Please note some of my observations are system-specific. My system specs:

- i7-4770 processor

- 16 GB RAM

- 500 GB SSD

- 8 TB Purple hard drive

- PNY NVIDIA Quadro P400 V2

- Headless

- 9 x 2MP cameras, all continuously dual streaming

- 5/9 cameras using DeepStack (all Dahua and triggered via ONVIF using IVS tripwires)

- EDIT: DeepStack default object detection only - no face detection, no custom models

- This server is used only for Blue Iris and a PHP server (used only for home automation)

The next 6 screenshots show DeepStack processing times data for 5 cameras over a period of 3 weeks. Two images are shown for each week:

- the 1st (left) shows all the data (full-scale);

- the 2nd (right) shows a subset of the data on an expanded scale (0 to 600 msec).

1) Period 1: the week before the P400 was installed.

2) Period 2: the week during which the P400 and DS GPU version were installed (this event took place before midnight on day 2)

3) Period 3: the week after the P400 card was installed.

The next 2 images compare the DeepStack processing time statistics for Periods 1 & 3 (7 days on the CPU version vs 7 days on the GPU version). Please note that the statistical analysis excludes the long-duration event 'outliers' and uses only the data points between 0 and 1000 msec. This approach was taken to provide a 'cleaner', more meaningful comparison when the system is running 'normally' (not stressed).
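For anyone who wants to run the same kind of comparison on their own data, the outlier filter described above could be sketched roughly like this in Python. This is only an illustration, not the tool used to produce the screenshots: the function name `summarize`, the `low`/`high` parameters, and the sample values are all made up, and it assumes the sample standard deviation is the one of interest.

```python
from statistics import mean, stdev

def summarize(times_ms, low=0, high=1000):
    """Return (count, mean, stdev) for the times inside [low, high] msec."""
    # Drop the long-duration outliers before computing any statistics.
    kept = [t for t in times_ms if low <= t <= high]
    if len(kept) < 2:
        raise ValueError("need at least two in-range data points")
    return len(kept), mean(kept), stdev(kept)

# Hypothetical processing times in msec; 4200 and 15000 stand in for the
# long-duration events that the comparison leaves out.
sample = [120, 135, 150, 4200, 140, 15000, 155]
count, avg, spread = summarize(sample)
print(f"{count} events, mean {avg:.0f} msec, stdev {spread:.1f} msec")
```

Running the same function over the Period 1 and Period 3 data separately would give the two sets of numbers being compared.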

This last screenshot is a mark-up of the expanded scale data for Period 2.

Observations:

- Overall: as expected, the GPU version yields much faster and less noisy results, and the frequency and severity of very long events are greatly reduced.

- The worst processing times for the GPU version are rarely slower than the best processing times for the CPU version.

- Statistics: The GPU version is ~3.4X faster (139 msec mean vs 469 msec) and ~2.8X less dispersed (43 msec stdev vs 122 msec).

Note also that both Periods 1 & 3 contain a similar number of events (1030 vs 1075) and a similar ratio of confirmed to total events (0.41 vs 0.38). The latter is consistent with the motion detection schemes being unchanged over the 3-week duration of this experiment.

- DeepStack 'Confirmed' events have the same statistics as 'Cancelled' events, regardless of the version. I'm not sure I expected this for the CPU version, but the data is convincing.

- Using the settings 'Use main stream if available' and 'Save DeepStack analysis details' increased the GPU version processing time by roughly 20%. (Please note that I conducted this experiment for a little over a day only, and I have not yet performed an independent evaluation of the two settings, so one of them may be dominating the apparent difference. If so, my bet is on the former.)
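As a quick sanity check, the ratios quoted in the observations can be reproduced from the summary figures. The snippet below is just that arithmetic in Python; the variable names are made up, and the last line assumes the "roughly 20%" increase applies to the 139 msec GPU mean, which is an assumption of this sketch rather than a measured value.

```python
# Summary figures quoted in the observations above (msec).
cpu_mean, gpu_mean = 469, 139
cpu_stdev, gpu_stdev = 122, 43

speedup = cpu_mean / gpu_mean          # how many times faster the GPU mean is
spread_ratio = cpu_stdev / gpu_stdev   # how many times tighter the GPU spread is

# ASSUMPTION: apply the "roughly 20%" penalty to the GPU mean to get a
# ballpark figure with both extra settings enabled.
gpu_mean_with_settings = gpu_mean * 1.2

print(f"speedup: {speedup:.1f}X")
print(f"spread ratio: {spread_ratio:.1f}X")
print(f"estimated GPU mean with both settings: {gpu_mean_with_settings:.0f} msec")
```

Even with that penalty, the estimated GPU mean stays well below the CPU mean.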
