Newbie here. I've googled for hours but haven't had much success.

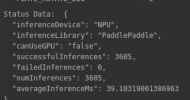

I currently run BI and CPAI on the same server, and it works OK. The server has no GPU, and AI inference takes 300-700 ms, even with only a few cameras. The server is an 11th-gen Intel i5 with 16 GB of RAM. For comparison, I also run Frigate on Linux with a Coral TPU on the same server, and its inference times are under 10 ms, so I'm looking for a way to speed things up without a GPU.

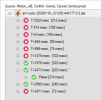

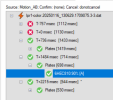

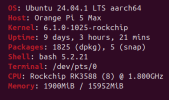

I want to offload the CPAI workload to an Orange Pi 5 Max to see whether performance improves, but I can't figure out how to install the RKNN modules: they are currently listed as "Not Available" on the CPAI server dashboard.

The Orange Pi runs the official Orange Pi Ubuntu distribution (22.04.5 LTS, kernel 6.1.43), and I am running CPAI 2.9.7 in Docker using the arm64 image. The container runs in privileged mode with /dev/bus/usb passed through as a device. Inside the container I can see those devices, and Docker confirms the container is privileged.
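To check what the privileged container can actually see, a small Python script like the one below could be run inside it. It is a minimal sketch under stated assumptions: it reads the board's device-tree `model` and `compatible` strings, which Linux exposes under `/proc/device-tree` on device-tree boards, and reports whether a few device nodes exist. The node list (`/dev/dri`, `/dev/dma_heap`, `/dev/rga`, `/dev/mpp_service`, `/dev/rknpu`) is an assumption based on common Rockchip BSP setups, not taken from CodeProject.AI, and whether CPAI's RKNN modules depend on any of these files is not confirmed here. It also assumes the image has a Python 3 interpreter.

```python
from __future__ import annotations

import os
from pathlib import Path

# ASSUMPTION: device nodes often present on Rockchip BSP kernels for the NPU
# and related media hardware; this list is NOT taken from CodeProject.AI docs.
CANDIDATE_NODES = ["/dev/dri", "/dev/dma_heap", "/dev/rga", "/dev/mpp_service", "/dev/rknpu"]


def split_dt_strings(raw: bytes) -> list[str]:
    """Split a device-tree property (NUL-separated strings) into a list."""
    return [s.decode("utf-8", errors="replace") for s in raw.split(b"\0") if s]


def read_dt_strings(path: str) -> list[str]:
    """Read a device-tree property file; return [] if it cannot be read."""
    try:
        return split_dt_strings(Path(path).read_bytes())
    except OSError:
        return []


def check_nodes(paths: list[str]) -> dict[str, bool]:
    """Map each path to whether it exists inside this container."""
    return {p: os.path.exists(p) for p in paths}


if __name__ == "__main__":
    model = read_dt_strings("/proc/device-tree/model")
    compatible = read_dt_strings("/proc/device-tree/compatible")
    print("model:", model)
    print("compatible:", compatible)
    print("rk3588 in compatible:", any("rk3588" in s for s in compatible))
    for node, present in check_nodes(CANDIDATE_NODES).items():
        print(f"{node}: {'present' if present else 'missing'}")
```

For example, it could be copied in with `docker cp check_rk.py codeproject-ai-server-arm64:/tmp/` and run with `docker exec codeproject-ai-server-arm64 python3 /tmp/check_rk.py` (the file name `check_rk.py` is hypothetical). Running the same script on the host and comparing the output shows whether anything is hidden from the container.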

My Docker Compose file:

```yaml
services:
  CodeProjectAI:
    image: codeproject/ai-server:arm64
    container_name: codeproject-ai-server-arm64
    privileged: true
    hostname: codeproject-ai-server
    restart: unless-stopped
    ports:
      - "5000:32168"
    environment:
      - TZ=America/Los_Angeles
    volumes:
      - /dev/bus/usb:/dev/bus/usb
      - codeproject_ai_data:/etc/codeproject/ai
      - codeproject_ai_modules:/app/modules
    devices:
      - /dev/bus/usb:/dev/bus/usb

volumes:
  codeproject_ai_data:
  codeproject_ai_modules:
```
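To compare the Orange Pi against the current i5 numbers on equal terms, the same snapshot could be sent repeatedly to each server and timed. The sketch below uses only the Python standard library and assumes CPAI's DeepStack-style `POST /v1/vision/detection` endpoint with an `image` form field; with the compose file above, that endpoint would be reachable on host port 5000, which maps to the container's port 32168. The `inferenceMs` response field is an assumption and is only used if present; the client-side round trip is always measured. The host name `orangepi` and the file names are placeholders.

```python
from __future__ import annotations

import json
import statistics
import sys
import time
import urllib.request
import uuid


def build_multipart(field: str, filename: str, data: bytes, boundary: str) -> bytes:
    """Encode a single file field as a multipart/form-data request body."""
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Min / median / max of latency samples in milliseconds."""
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }


def time_detection(url: str, image_path: str, runs: int = 20):
    """POST the same image `runs` times; return (round_trip_ms, server_ms)."""
    with open(image_path, "rb") as f:
        image = f.read()
    round_trip, server_side = [], []
    for _ in range(runs):
        boundary = uuid.uuid4().hex
        body = build_multipart("image", "snapshot.jpg", image, boundary)
        req = urllib.request.Request(
            url,
            data=body,
            method="POST",
            headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        )
        start = time.perf_counter()
        with urllib.request.urlopen(req, timeout=30) as resp:
            payload = json.load(resp)
        round_trip.append((time.perf_counter() - start) * 1000)
        # ASSUMPTION: the server reports its own timing as "inferenceMs";
        # if the field is missing, only the client-side round trip is kept.
        if "inferenceMs" in payload:
            server_side.append(float(payload["inferenceMs"]))
    return round_trip, server_side


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: bench_cpai.py URL IMAGE")
    else:
        rt, srv = time_detection(sys.argv[1], sys.argv[2])
        print("round trip ms:", summarize(rt))
        if srv:
            print("server inferenceMs:", summarize(srv))
```

For example, `python3 bench_cpai.py http://orangepi:5000/v1/vision/detection snapshot.jpg` against the Orange Pi, then the same command pointed at the i5 server's CPAI port (32168 by default). The first request may be slower while a model loads, which is why the median is reported alongside min and max.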

If you have CPAI running on an RK3588, I'd love some advice on how to get this going.

Thanks!

I just wish Portainer's font color were different. I'm color blind, and it was hard to read (too close to the white background for me).