Should the License Plate Reader work with non-CUDA GPUs, the way the YOLOv5 .NET module works with DirectML? Or would that require a separate build of the License Plate Reader to support DirectML?

I ask because I am able to run on the GPU using the YOLOv5 .NET model, but when I try to enable the GPU for the ALPR module, it shuts down and then restarts with CPU. I have included the short section of the CodeProject log from the failed GPU attempt. The log shows the lines "** Module ALPR has shutdown" and "ALPR_adapter.py: has exited", but it doesn't give a reason or error code. At a minimum, does it seem reasonable to ask CodeProject to include the reason in the log so we know WHY it's not working?

Note that further down in the log my MIN_COMPUTE_CAPABILITY=6 is shown, which was mentioned above as a criterion. Having said that, is there a magic number for MIN_COMPUTE_CAPABILITY, and is it applied to all modules (e.g. YOLOv5 and ALPR) or only to some of them?
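
To make the question concrete, here is my mental model of what a minimum compute capability check might look like. This is purely a guess on my part, not CodeProject's actual code: the function name `gpu_allowed` and the idea that only the major version is compared are my assumptions. With PyTorch, for example, `torch.cuda.get_device_capability()` returns a `(major, minor)` tuple of this kind for a CUDA device.

```python
from typing import Optional, Tuple


def gpu_allowed(capability: Optional[Tuple[int, int]], min_major: int = 6) -> bool:
    """Hypothetical check: does a CUDA device pass a MIN_COMPUTE_CAPABILITY threshold?

    capability is the device's (major, minor) compute capability, or None
    when no CUDA device is visible (e.g. a GPU reachable only via DirectML).
    """
    if capability is None:
        # No CUDA device reported, so a CUDA-only module would fall back to CPU.
        return False
    major, _minor = capability
    # Assumption: only the major version is compared against the threshold.
    return major >= min_major
```

Under this guess, `gpu_allowed((6, 1))` passes, `gpu_allowed((5, 2))` does not, and a non-CUDA GPU (`None`) never passes no matter what the threshold is. If that is roughly how it works, it would explain a silent fallback to CPU on my hardware.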

Since the bulk of my processing is going through the CPU, and I have plenty of CPU to spare, this doesn't particularly bother me, so I'm mostly just interested in having a better understanding.

View attachment 186963

Code:

2024-02-19 12:14:05: Update ALPR. Setting EnableGPU=true

2024-02-19 12:14:05: *** Restarting License Plate Reader to apply settings change

2024-02-19 12:14:05: Sending shutdown request to python/ALPR

2024-02-19 12:14:05: Client request 'Quit' in queue 'alpr_queue' (#reqid 5cc6168f-8ab5-4ed7-a403-436ccb548fc0)

2024-02-19 12:14:05: Request 'Quit' dequeued from 'alpr_queue' (#reqid 5cc6168f-8ab5-4ed7-a403-436ccb548fc0)

2024-02-19 12:14:05: License Plate Reader: Retrieved alpr_queue command 'Quit' in License Plate Reader

2024-02-19 12:14:17: ALPR_adapter.py: License Plate Reader started.

2024-02-19 12:14:18: ** Module ALPR has shutdown

2024-02-19 12:14:18: ALPR_adapter.py: has exited

2024-02-19 12:14:38: ALPR went quietly

2024-02-19 12:14:38: Running module using: C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\Scripts\python

2024-02-19 12:14:38:

2024-02-19 12:14:38: Attempting to start ALPR with C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\Scripts\python "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR_adapter.py"

2024-02-19 12:14:38: Starting C:\Program Files...ws\python39\venv\Scripts\python "C:\Program Files...\modules\ALPR\ALPR_adapter.py"

2024-02-19 12:14:38:

2024-02-19 12:14:38: ** Module 'License Plate Reader' 3.0.1 (ID: ALPR)

2024-02-19 12:14:38: ** Valid: True

2024-02-19 12:14:38: ** Module Path: <root>\modules\ALPR

2024-02-19 12:14:38: ** AutoStart: True

2024-02-19 12:14:38: ** Queue: alpr_queue

2024-02-19 12:14:38: ** Runtime: python3.9

2024-02-19 12:14:38: ** Runtime Loc: Local

2024-02-19 12:14:38: ** FilePath: ALPR_adapter.py

2024-02-19 12:14:38: ** Pre installed: False

2024-02-19 12:14:38: ** Start pause: 3 sec

2024-02-19 12:14:38: ** Parallelism: 0

2024-02-19 12:14:38: ** LogVerbosity:

2024-02-19 12:14:38: ** Platforms: all

2024-02-19 12:14:38: ** GPU Libraries: installed if available

2024-02-19 12:14:38: ** GPU Enabled: enabled

2024-02-19 12:14:38: ** Accelerator:

2024-02-19 12:14:38: ** Half Precis.: enable

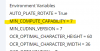

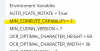

2024-02-19 12:14:38: ** Environment Variables

2024-02-19 12:14:38: ** AUTO_PLATE_ROTATE = True

2024-02-19 12:14:38: ** MIN_COMPUTE_CAPABILITY = 6

2024-02-19 12:14:38: ** MIN_CUDNN_VERSION = 7

2024-02-19 12:14:38: ** OCR_OPTIMAL_CHARACTER_HEIGHT = 60

2024-02-19 12:14:38: ** OCR_OPTIMAL_CHARACTER_WIDTH = 30

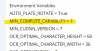

2024-02-19 12:14:38: ** OCR_OPTIMIZATION = True

2024-02-19 12:14:38: ** PLATE_CONFIDENCE = 0.7

2024-02-19 12:14:38: ** PLATE_RESCALE_FACTOR = 2

2024-02-19 12:14:38: ** PLATE_ROTATE_DEG = 0

2024-02-19 12:14:38:

2024-02-19 12:14:38: Started License Plate Reader module

2024-02-19 12:14:42: ALPR_adapter.py: Running init for License Plate Reader
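
In case it helps anyone compare settings between a working and a failing start, here is a rough sketch for pulling the "Environment Variables" entries out of a log like the one above. It assumes every such line has the shape `<timestamp>: ** NAME = value`, as in my log; the function name `parse_env_settings` is my own and not part of CodeProject.AI.

```python
import re
from typing import Dict

# Matches lines such as "2024-02-19 12:14:38: ** MIN_COMPUTE_CAPABILITY = 6".
# Lines like "** Valid: True" use a colon instead of "=" and are skipped.
ENV_LINE = re.compile(
    r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}:\s+\*\*\s+([A-Z][A-Z0-9_]*)\s*=\s*(.*?)\s*$"
)


def parse_env_settings(log_text: str) -> Dict[str, str]:
    """Return the NAME = value pairs found in the log, with values kept as strings."""
    settings: Dict[str, str] = {}
    for line in log_text.splitlines():
        match = ENV_LINE.match(line.strip())
        if match:
            settings[match.group(1)] = match.group(2)
    return settings
```

Running this over two logs and diffing the resulting dictionaries shows which settings changed between runs. It does not recover the missing shutdown reason, since that never appears in the log in the first place.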