CodeProject.AI Version 2.5

- Thread starter MikeLud1

Are the custom models included with the YOLOv5 6.2 module in CodeProject.AI v2.6.2, or do I need to download Mike Lud's IPCam models manually?

I reinstalled the module several times and there are no included models.

Interestingly, YOLOv5 3.1 does have included models. Is 6.2 broken?

mwilky

n3wb

Is there any way to install an older version of a module? I just updated to Coral 2.3.1 and am having all sorts of issues, so I need to install the previous one until I have time to debug.

Is there a way to tell exactly which cam triggered or sent each line of the log? I'm wondering how to tweak some settings for slower response times, but I wasn't sure which cam to change the settings on in BI. I have been trying to go by the time, but that is very tedious and I'm still not sure exactly which cam it is. Also, what do these numbers represent?

I was just going to send the configuration file with AutoStart changed to "true". There is a bug that is not saving any setting from the default settings.

For some reason, every time I edit the C:\Program Files\CodeProject\AI\modules\ObjectDetectionYOLOv5Net\modulesettings.json file to set AutoStart to "True", CPAI shows on restart that the module is no longer installed. Am I editing the wrong file?

Update: I found that this file was missing: "C:\ProgramData\CodeProject\AI\modulesettings.json". This was the reason it would not save any of the start options for my module. I have done 2 clean installs of 2.6.5 on 2 different computers and neither had this file. Hopefully this gets fixed in the next update.
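For anyone hand-editing modulesettings.json, one thing worth ruling out is how the value is spelled. JSON booleans are lowercase `true`/`false`; a bare `True` is not valid JSON, and a quoted `"True"` parses but as a string rather than a boolean. The sketch below uses Python's standard `json` module on three made-up one-line fragments. The `AutoStart` key comes from the posts above, but the fragments are hypothetical and do not reflect the real file layout, and whether this is what made the module disappear is only a guess.

```python
import json

# Hypothetical fragments; the real modulesettings.json has more nesting.
valid_snippet = '{"AutoStart": true}'
invalid_snippet = '{"AutoStart": True}'
quoted_snippet = '{"AutoStart": "True"}'


def parses_as_json(text):
    """Return True if text is valid JSON, False otherwise."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


print(parses_as_json(valid_snippet))    # lowercase true is accepted
print(parses_as_json(invalid_snippet))  # capitalised True is rejected

# The quoted form is valid JSON, but the value is a string, not a boolean.
print(type(json.loads(quoted_snippet)["AutoStart"]).__name__)
```

If the file you edited fails a check like this, restoring the lowercase unquoted `true` is a cheap thing to try before reinstalling.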

That maxes out the clock speeds and memory speeds and adds heat and energy consumption. P-states is what you want to change. Nvidia has P0-P15 states. P0 being the fastest. I run at P5 (810,810) which is basically idle watts and detection times within 5 to 10 ms of the card running full throttle. Using nvidia-smi to change speeds and memory is more efficient in my testing. If anyone wants the commands I’ll post them.

Thanks for this info. I tried the P5 (810,810) and it helped a lot, but then tried P3 (1620,1620) and it's really working great now.
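The exact commands were not posted, so the following is only a guess at what they might look like. nvidia-smi has `-lgc` (lock GPU clocks) and `-lmc` (lock memory clocks) options that take a `min,max` pair in MHz, and `-i` to pick a GPU. The sketch assumes the "(810,810)" above is such a pair and only builds and prints the command lines; it does not run them. The function name and the GPU index are made up for illustration, and not every card or driver supports both options.

```python
import shlex


def clock_lock_commands(min_mhz, max_mhz, gpu_index=0):
    """Build (but do not run) nvidia-smi command lines that lock the
    GPU and memory clocks to the given min,max range in MHz."""
    base = ["nvidia-smi", "-i", str(gpu_index)]
    clock_range = f"{min_mhz},{max_mhz}"
    return [
        base + ["-lgc", clock_range],  # lock GPU (graphics) clocks
        base + ["-lmc", clock_range],  # lock memory clocks
    ]


# Assumed reading of "P5 (810,810)" from the post above.
for cmd in clock_lock_commands(810, 810):
    print(shlex.join(cmd))
```

Running the printed commands normally needs admin/root rights, and `nvidia-smi -rgc` and `nvidia-smi -rmc` reset the clocks again. Check `nvidia-smi -q -d SUPPORTED_CLOCKS` for the values your card accepts.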

hopalong

Getting the hang of it

I posted this in the ALPR thread but might get a better response here.

I just installed a Tesla P4 8GB in my unraid server for CP.AI. Pulled this tag: codeproject/ai-server:cuda12_2-2.6.5. A couple of questions.

Is this the appropriate tag for using an Nvidia GPU? Which version of Yolo should I be using with this specific GPU? Is ALPR able to use this GPU?

Whenever I try to enable GPU for the LPR it keeps going back to CPU.

View attachment 199785

Here is the LPR Info where it shows GPU libraries are not installed:

Code:

Module 'License Plate Reader' 3.2.2 (ID: ALPR)

Valid: True

Module Path: <root>/modules/ALPR

Module Location: Internal

AutoStart: True

Queue: alpr_queue

Runtime: python3.8

Runtime Location: Local

FilePath: ALPR_adapter.py

Start pause: 3 sec

Parallelism: 0

LogVerbosity:

Platforms: all,!windows-arm64

GPU Libraries: not installed

GPU: use if supported

Accelerator:

Half Precision: enable

Environment Variables

AUTO_PLATE_ROTATE = True

CROPPED_PLATE_DIR = <root>/Server/wwwroot

MIN_COMPUTE_CAPABILITY = 6

MIN_CUDNN_VERSION = 7

OCR_OPTIMAL_CHARACTER_HEIGHT = 60

OCR_OPTIMAL_CHARACTER_WIDTH = 30

OCR_OPTIMIZATION = True

PLATE_CONFIDENCE = 0.7

PLATE_RESCALE_FACTOR = 2

PLATE_ROTATE_DEG = 0

REMOVE_SPACES = False

ROOT_PATH = <root>

SAVE_CROPPED_PLATE = False

Status Data: {

"inferenceDevice": "CPU",

"inferenceLibrary": "",

"canUseGPU": "false",

"successfulInferences": 19,

"failedInferences": 0,

"numInferences": 19,

"averageInferenceMs": 138.0

}

Started: 28 Jul 2024 12:52:06 PM Pacific Standard Time

LastSeen: 28 Jul 2024 12:52:37 PM Pacific Standard Time

Status: Started

Requests: 16112 (includes status calls)

Here is the system info:

Code:

Server version: 2.6.5

System: Docker (b942b79acfaf)

Operating System: Linux (Ubuntu 22.04)

CPUs: Intel(R) Xeon(R) CPU E3-1230 V2 @ 3.30GHz (Intel)

1 CPU x 4 cores. 8 logical processors (x64)

GPU (Primary): Tesla P4 (8 GiB) (NVIDIA)

Driver: 550.40.07, CUDA: 11.5.119 (up to: 12.4), Compute: 6.1, cuDNN: 8.9.6

System RAM: 16 GiB

Platform: Linux

BuildConfig: Release

Execution Env: Docker

Runtime Env: Production

Runtimes installed:

.NET runtime: 7.0.19

.NET SDK: Not found

Default Python: 3.10.12

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

System GPU info:

GPU 3D Usage 13%

GPU RAM Usage 1.3 GiB

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168

Also here is an error I just noticed that has popped up a few times:

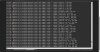

Code:

15:00:37:Object Detection (YOLOv5 6.2): [RuntimeError] : Traceback (most recent call last):

File "/app/preinstalled-modules/ObjectDetectionYOLOv5-6.2/detect.py", line 141, in do_detection

det = detector(img, size=640)

File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1190, in _call_impl

return forward_call(*input, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/yolov5/models/common.py", line 705, in forward

y = self.model(x, augment=augment) # forward

File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1190, in _call_impl

return forward_call(*input, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/yolov5/models/common.py", line 515, in forward

y = self.model(im, augment=augment, visualize=visualize) if augment or visualize else self.model(im)

File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1190, in _call_impl

return forward_call(*input, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/yolov5/models/yolo.py", line 209, in forward

return self._forward_once(x, profile, visualize) # single-scale inference, train

File "/usr/local/lib/python3.8/dist-packages/yolov5/models/yolo.py", line 121, in _forward_once

x = m(x) # run

File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1190, in _call_impl

return forward_call(*input, **kwargs)

File "/usr/local/lib/python3.8/dist-packages/yolov5/models/yolo.py", line 75, in forward

wh = (wh * 2) ** 2 * self.anchor_grid[i] # wh

RuntimeError: The size of tensor a (48) must match the size of tensor b (36) at non-singleton dimension 2
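A note on reading that last line: the two tensors disagree at dimension 2, which in YOLOv5's detection head is, as far as I can tell, the number of grid rows (the layout assumed here is batch, anchors, rows, columns, 2). One possible explanation, not confirmed anywhere in this thread, is that the cached anchor grid was built for a frame of a different height than the one being decoded, for example when cameras with different aspect ratios hit the detector at the same time. The pure-Python sketch below just reproduces the arithmetic. The frame heights 384 and 288 are hypothetical values picked to give 48 and 36 rows at stride 8; only `size=640` comes from the traceback.

```python
def first_mismatch(shape_a, shape_b):
    """Return the index of the first dimension that cannot be broadcast
    between two equal-rank shapes, or None if they are compatible."""
    for dim, (a, b) in enumerate(zip(shape_a, shape_b)):
        if a != b and a != 1 and b != 1:
            return dim
    return None


STRIDE = 8  # finest YOLOv5 detection stride

# Hypothetical padded frame heights chosen to reproduce 48 and 36.
rows_now = 384 // STRIDE     # rows in the tensor being decoded
rows_cached = 288 // STRIDE  # rows in a grid built for another frame
cols = 640 // STRIDE         # size=640 comes from the traceback

wh_shape = (1, 3, rows_now, cols, 2)
anchor_grid_shape = (1, 3, rows_cached, cols, 2)

print(rows_now, rows_cached)
print(first_mismatch(wh_shape, anchor_grid_shape))
```

If that reading is right, the error would be intermittent and tied to mixed frame sizes rather than to the GPU itself, which fits with it only popping up a few times.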

AlwaysSomething

Pulling my weight

There is another thread for a Coral AI problem (still CPAI but a different module) where we are having a similar issue: it keeps reverting to the CPU, so maybe the root cause is the same regardless of module. I see you are using Linux but I am using Windows. So again, maybe it's the same piece of underlying code.

I also noticed that if I reboot the PC the module does not start automatically (even though it was started when I rebooted).

Another thing I noticed is that it is not saving the Model or Model Size I choose when I reboot.

I've uninstalled, deleted the folders, and reinstalled at least a dozen times with the same outcome.

I'm going to try to revert to 2.6.2 since that was more stable for me. 2.5.1 was the most stable version for me but unfortunately it won't install correctly since they updated the installer scripts. I only upgraded since the newer version enabled multiple TPUs.

I was also having the issue with it not saving settings or starting after reboot. Mine was caused by this file missing: "C:\ProgramData\CodeProject\AI\modulesettings.json". I got a copy of the file from my brother, who was using an earlier version of the YOLOv5 .NET module. I just edited it, and mine works fine now and saves my config again.

AlwaysSomething

Pulling my weight

I have an SFF (small form factor) PC that can only take low-profile (half-height) PCIe cards. Can anyone recommend a low-power GPU that would fit and still be suitable for CPAI? Currently I just need it for Object Detection and probably LPR in the near future.

I already have Coral TPUs (PCIe single and duals) right now, but CPAI is not mature enough for them yet (IMO) and I can't keep spending time troubleshooting/upkeeping. Also, there still isn't support for custom models with TPU, and no LPR. Sad, because I bought a few TPUs with high hopes when CPAI version 2.5.1 was running well but with only a single TPU.

@AlwaysSomething I have Optiplex SFF, I bought this card because it's half height

Good performance for CPAI, including LPR.

mwilky

n3wb

Reverting to 2.2.2 has fixed all my issues with falling back to CPU. It's been up for weeks straight now and has never fallen back once. Might be worth trying.

Thanks for the info. When I went looking for your card I found this for a small amount more, a 4060 with 8 gigs of memory...

Vettester

Getting comfortable

Are you powering this with the original power supply?

@Vettester Yes, I am running this card with the original SFF power supply, which also powers two 3.5" HDs and one SSD.

AlwaysSomething

Pulling my weight

@actran @David L Thank you for the recommendations. The only problem with the cards you all mentioned is that they take up 2 slots and I only have 2 PCIe slots. Unfortunately, I already have a 2nd NIC in one of the slots. I should have mentioned that. Sorry. I'll have to think about whether I want to remove the 2nd NIC and go to using VLANs or something else. Mike Lud made a list a while back for GPU and LPR. I'll have to find that and spend some time going through it.

@mwilky I tried reverting to 2.5.1 (last version that worked well for me) but got 404 errors on the installer. I have some more installers for prior versions but have to see if they will work. I don't have the one for 2.2.2 so I may ask you for it.

I don't have the reverting to CPU problem anymore. I reinstalled the Coral driver, which seemed to fix that (for now).

The problem I have is that I select the Efficient-Lite model and the logs say it's using it, but it is really using the MobileNetSSD model. If I go into the CPAI Explorer to test images, I can prove it's using MobileNetSSD and not Efficient-Lite as selected. I posted this months ago as a bug in 2.6.2. Before 2.6.2 I had 2.5.1, where the model selector worked but dual TPU (multiple TPU) either wasn't supported or was buggy (I can't remember). That was why I upgraded to 2.6.2 when it came out. Otherwise I don't upgrade just to upgrade. I can find that post, which shows the steps I used to prove it (keeping this post from being TL;DR).

The biggest problem I have is that the default model "MobileNetSSD" is not good (it's OK) at identifying/classifying objects. I honestly think this is what may be turning a lot of people away from the Coral TPU, especially if they are selecting the different models and getting the same results. The inference times for MobileNetSSD are quick (30 ms) but the results are not accurate. Efficient-Lite (medium size) takes a little longer (100-120 ms with a single TPU) but was very accurate for me. For example, with MobileNetSSD every time a car drives by it tags it as a person. So if I'm searching for people I'm getting every car that drives by (which is 100 times more than people walking by). A few more examples: it tags all vehicles as cars, so if I'm searching for Bus or Truck I'm not finding them. It also misses my dog, which is close to the camera and perfectly positioned (sideways) for identifying. 2.5.1 was the last version that correctly used the selected model (Efficient-Lite) versus the default "MobileNetSSD".

I looked at some of the dat files to see if it was just trained so that if it sees a car it puts person, but it wasn't. For some reason the glares on the windows are what is boxed as the person. Like I said, I spent a LOT of time testing/researching it.

I know I posted it here months ago and discussed it with Seth (CPAI dev) but it probably got forgotten. I thought I posted on the CPAI site as well but have to see. IMO their site is not as user friendly as IPCAMTALK so I try here first. I don't think I could attach images there either, which deterred me since I would have to type a lot more info (a picture is worth a thousand words).

Thanks for hearing me vent. LOL

Use a USB-ethernet adaptor for the internet NIC - cheaper than a VLAN switch and opens up a PCIe slot.

hopalong

Getting the hang of it

I'm using a Tesla P4 8GB though you have to add some type of active cooling on it which takes up room. I bought a 3D printed fan shroud and added a 40mm Noctua. I think there are some alternatives that sit above the heatsink too. Not sure how much space you have.

I'm getting much better Yolo inference times: 17 to 50 ms, down from 1000+ ms. Plus my CPU is not pegged any more. The card uses up to 75W through the PCIe slot.
