MikeLud1

IPCT Contributor

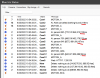

If you are only using custom models, you need to uncheck Default object detection.

So I've been testing. Thanks for pointing out the weird general/general1 thing. I was pulling my hair out trying to figure out why it wasn't using the model. Detection seems faster, but...

While testing/tuning, I still get boxes drawn for suitcase, tv, and potted plant. It indicates that it's using the general1 model, but it's clearly still detecting things other than person and vehicle. Not sure what's happening.

Anyway, thank you, Mike, for all the work you're putting into the custom models.