Interesting results. The animal model picked my furry dog up as a dog, critter as a cat, dark as a cat, and combined picked up nothing. I am using combined only at the moment!!

combined

animal

critters

dark

But critter gave 85%, double what the others gave. My dog does look like a cat tbh

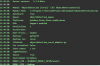

Inference speeds are not too shabby on a couple of tests.

