Over-allocation will cause high CPU usage!

1)

Turn on the Intel GPU in the BIOS. The intel drive should load. The windows task manager shoud show two GPUs.

2) use the acceleration on Intel, use the Nvidia for the video.

2) set the iframe on your cameras to twice the framerate.

3) implement substreams.

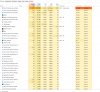

4) fix the allocation problem on the AUX drive. You are over allocated.

What are the make and model numbers of some of your cameras?

=======================================

My standard allocation post.

1) Do not use time (limit clip age) to determine when BI video files are moved or deleted; use only space. Using time wastes disk space.

2) If New and Stored are on the same disk drive, do not use Stored: set the Stored size to zero and set the New folder to delete, not move. Moving files between them only wastes CPU time and increases the number of disk writes. You can leave the Stored folder on the drive; just do not use it.

3) Never allocate over 90% of the total disk drive to BI.

4) If using continuous recording, on the Record tab of the BI camera settings, set the combine and cut video option to 1 hour or 3 GB. Really big files are difficult to transfer.

5) It is recommended NOT to store video on an SSD (the C: drive).

6) Do not run the disk defragmenter on the video storage disk drives.

7) Do not run virus scanners on BI folders.

8) An alternate way to allocate space on multiple drives is to assign different cameras to different drives, so there is no file movement between New and Stored.

9) Never use an external USB drive for the NEW folder. Never use a network drive for the NEW folder.
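The 90% rule above is easy to check by hand, but here is a minimal Python sketch of the arithmetic. It is not part of Blue Iris; the function names are made up for illustration, and it assumes the folder sizes you enter in BI are in GB of 1024^3 bytes, so adjust the constant if your numbers are decimal GB.

```python
import shutil

GIB = 1024 ** 3          # assumption: 1 GB = 1024^3 bytes
MAX_FRACTION = 0.90      # the "never allocate over 90%" rule of thumb


def max_allocation_gb(total_bytes, fraction=MAX_FRACTION):
    """Largest combined folder allocation (in GB) that stays within the limit."""
    return total_bytes * fraction / GIB


def is_over_allocated(total_bytes, allocated_gb, fraction=MAX_FRACTION):
    """True if the folder sizes add up to more than the allowed share of the drive."""
    return allocated_gb > max_allocation_gb(total_bytes, fraction)


def check_drive(path, allocated_gb):
    """Read the drive's total size from the OS and apply the rule to it."""
    total = shutil.disk_usage(path).total
    return is_over_allocated(total, allocated_gb)
```

For example, `check_drive("D:\\", 3500)` (a hypothetical drive letter and allocation) returns `True` if 3500 GB is more than 90% of that drive. Add up the sizes of every BI folder on the same drive before checking.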

Advanced storage:

If you are using a complete disk for large video file storage (BVR) with continuous recording, I recommend formatting the disk with a Windows cluster size of 1024K (1 megabyte). This is an increase from the 4K default. It will reduce the physical number of disk writes, decrease disk fragmentation, and speed up access.
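To get a feel for the difference, here is a rough Python sketch that counts how many clusters one video file occupies at each cluster size. It only counts allocation units (it does not measure real disk writes), and the function names are hypothetical.

```python
KIB = 1024


def clusters_needed(file_bytes, cluster_bytes):
    """Number of clusters a file occupies (rounded up to a whole cluster)."""
    return -(-file_bytes // cluster_bytes)  # integer ceiling division


def slack_bytes(file_bytes, cluster_bytes):
    """Space lost in the last, partly filled cluster of the file."""
    return clusters_needed(file_bytes, cluster_bytes) * cluster_bytes - file_bytes


file_size = 3 * 1024 ** 3  # a 3 GB file, the cut size suggested above

small = clusters_needed(file_size, 4 * KIB)      # 786432 clusters at 4K
large = clusters_needed(file_size, 1024 * KIB)   # 3072 clusters at 1024K
print(small, large, small // large)              # 786432 3072 256
```

The trade-off is slack: each file can waste up to one cluster (just under 1 MB at the 1024K size), which is negligible for multi-gigabyte BVR files but adds up on a drive full of small files.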

Hint:

On the Blue Iris status (lightning bolt graph) Clip storage tab, if there is any red on the bars you have an allocation problem. If there is no green, you have no free space; this is bad.