Also, do we have access to the plate as a variable to be able to pass to an API?

I haven't confirmed this for the CPAI LPR module, but when using Plate Recognizer I use &PLATE.

See 5.6.8 - January 8, 2023
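If you want to hand the plate to your own API, one option is a small HTTP endpoint that a Blue Iris alert action calls with &PLATE in the URL. The sketch below assumes Blue Iris substitutes the plate text for &PLATE before sending the request. The example URL http://192.168.1.50:8080/plate?plate=&PLATE, the port and the `plate` parameter name are hypothetical placeholders, not anything Blue Iris defines.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical parameter name; it must match the one used in the Blue Iris
# alert action URL, e.g. http://192.168.1.50:8080/plate?plate=&PLATE
PLATE_PARAM = "plate"


def extract_plate(request_path: str) -> Optional[str]:
    """Return the plate text from a path like '/plate?plate=ABC123', or None."""
    # parse_qs URL-decodes values and drops empty ones by default.
    values = parse_qs(urlparse(request_path).query).get(PLATE_PARAM)
    if not values:
        return None
    plate = values[0].strip()
    return plate or None


class PlateHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        plate = extract_plate(self.path)
        if plate is None:
            self.send_response(400)  # no plate in the request
            self.end_headers()
            return
        # Replace this print with the call to your own API.
        print(f"Plate received: {plate}")
        self.send_response(200)
        self.end_headers()


if __name__ == "__main__":
    # Port 8080 is an arbitrary choice for this sketch.
    HTTPServer(("0.0.0.0", 8080), PlateHandler).serve_forever()
```

Keeping the parsing in its own function makes it easy to test without running the server.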


Mike, where in Tapatalk is this new thread located? The original was under What's New.

I started a new thread for all topics on CodeProject.AI version 2.0.

CodeProject.AI Version 2.0

CodeProject.AI version 2.0 was released Jan 16, 2023; this thread is for all topics on CodeProject.AI version 2.0. https://www.codeproject.com/Articles/5322557/CodeProject-AI-Server-AI-the-easy-way If you need any info on CodeProject.AI version 1.x, please refer to the link below (there is some...

ipcamtalk.com

Oh, under AI. Never mind.

Mike, where in Tapatalk is this new thread located? The original was under What's New.

I don't see the new thread yet.

MikeLud1: did anything ever come of this testing of module(s) for older Nvidia GPUs?

One of the new modules will support some of the older Nvidia GPUs. The developer is looking for someone to do some testing; if you have one of the below GPUs and are willing to test, let me know.

GeForce:

GeForce GTX 770, GeForce GTX 760, GeForce GT 740, GeForce GTX 690, GeForce GTX 680, GeForce GTX 670, GeForce GTX 660 Ti, GeForce GTX 660, GeForce GTX 650 Ti BOOST, GeForce GTX 650 Ti, GeForce GTX 650

GeForce GTX 880M, GeForce GTX 870M, GeForce GTX 780M, GeForce GTX 770M, GeForce GTX 765M, GeForce GTX 760M, GeForce GTX 680MX, GeForce GTX 680M, GeForce GTX 675MX, GeForce GTX 670MX, GeForce GTX 660M, GeForce GT 750M, GeForce GT 650M, GeForce GT 745M, GeForce GT 645M, GeForce GT 740M, GeForce GT 730M, GeForce GT 640M, GeForce GT 640M LE, GeForce GT 735M, GeForce GT 730M

GeForce GTX Titan Z, GeForce GTX Titan Black, GeForce GTX Titan, GeForce GTX 780 Ti, GeForce GTX 780, GeForce GT 640 (GDDR5), GeForce GT 630 v2, GeForce GT 730, GeForce GT 720, GeForce GT 710, GeForce GT 740M (64-bit, DDR3), GeForce GT 920M

Quadro, NVS:

Quadro K5000, Quadro K4200, Quadro K4000, Quadro K2000, Quadro K2000D, Quadro K600, Quadro K420

Quadro K500M, Quadro K510M, Quadro K610M, Quadro K1000M, Quadro K2000M, Quadro K1100M, Quadro K2100M, Quadro K3000M, Quadro K3100M, Quadro K4000M, Quadro K5000M, Quadro K4100M, Quadro K5100M

NVS 510, Quadro 410

Quadro K6000, Quadro K5200

Tesla/Datacenter:

Tesla K10, GRID K340, GRID K520, GRID K2

Tesla K40, Tesla K20x, Tesla K20

For your GT710 you need to install CUDA 10.2 and use the Object Detection (YOLOv5 3.1) module.

MikeLud1: did anything ever come of this testing of module(s) for older Nvidia GPUs?

I've been using a GT710, a Kepler-based GPU, and I actually couldn't get it to work with Deepstack. The error indicated that the PyTorch version didn't support a card that old. It seems you would have to use CUDA 8.0 or something like that.

I've been trying version 2.6.0 several times, and the YOLOv5 6.2 module is extremely slow, around 3 seconds or more per detection (CPU).

Once, I was able to get the Intel GPU working; it was a little slower than version 1.6.8, around 500 to 700 ms.

Now I have gone back to Deepstack on my Nvidia Jetson (ipcam-combined or ipcam-dark, getting 150 to 300 ms).

Just wondering if there was anything that was found about these older GPUs.

OK, I have been running CPAI version 1.6.7 very successfully for a few months now on a "CPU only" (i5-8500) system, but am a bit hesitant to upgrade to the newer 2.0.x versions, partly due to my general confusion over which modules I would want to run. My current CPAI only loads the "Object Detection (YOLO)" module, with no further refinement of version/revision number. Now I see that we have several choices, even for CPU-only systems (or those with integrated Intel graphics), but how do we know which one to choose? Despite reading every post in all the relevant threads, I see no clear direction on this point.

For your GT710 you need to install CUDA 10.2 and use the Object Detection (YOLOv5 3.1) module.

CUDA 10.2 link

CUDA Toolkit 10.2 Download

Get CUDA Toolkit 10.2 for Windows, Linux, and Mac OSX.

developer.nvidia.com


For your integrated Intel GPU I would try Object Detection (YOLOv5 .NET) and compare it with Object Detection (YOLOv5 6.2) to see which one performs better; it should be Object Detection (YOLOv5 .NET).

OK, I have been running CPAI version 1.6.7 very successfully for a few months now on a "CPU only" (i5-8500) system, but am a bit hesitant to upgrade to the newer 2.0.x versions, partly due to my general confusion over which modules I would want to run. My current CPAI only loads the "Object Detection (YOLO)" module, with no further refinement of version/revision number. Now I see that we have several choices, even for CPU-only systems (or those with integrated Intel graphics), but how do we know which one to choose? Despite reading every post in all the relevant threads, I see no clear direction on this point.

Also, I see some users are seeing slower analysis times compared to the 1.6.x version of CPAI: has this been resolved yet? I am usually one to jump into Beta versions fairly quickly, but this upgrade gave me pause for several reasons. Advice welcome...
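To compare Object Detection (YOLOv5 .NET) against Object Detection (YOLOv5 6.2) on your own hardware, you could time the same image through the server with only one of the two modules enabled at a time. This is a rough sketch using only the Python standard library. It assumes the server is listening on http://localhost:32168 and exposes the DeepStack-compatible /v1/vision/detection route taking an `image` form field, so check both against your install; `test.jpg` is a placeholder file name.

```python
import os
import statistics
import time
import urllib.request
import uuid
from typing import Dict, List, Tuple

# Assumptions: default server address/port and the DeepStack-compatible
# detection route; adjust both to match your install.
DETECT_URL = "http://localhost:32168/v1/vision/detection"


def build_multipart(field: str, filename: str, data: bytes) -> Tuple[bytes, str]:
    """Encode one file as multipart/form-data; returns (body, Content-Type header)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def time_detection(image_path: str, runs: int = 10) -> List[float]:
    """POST the same image `runs` times and return round-trip times in ms."""
    with open(image_path, "rb") as f:
        data = f.read()
    body, content_type = build_multipart("image", os.path.basename(image_path), data)
    timings = []
    for _ in range(runs):
        req = urllib.request.Request(
            DETECT_URL, data=body, headers={"Content-Type": content_type}, method="POST"
        )
        start = time.perf_counter()
        with urllib.request.urlopen(req, timeout=30) as resp:
            resp.read()  # include the time to receive the full JSON reply
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings_ms: List[float]) -> Dict[str, float]:
    """Median, minimum and maximum of timings in milliseconds."""
    return {
        "median": statistics.median(timings_ms),
        "min": min(timings_ms),
        "max": max(timings_ms),
    }


if __name__ == "__main__":
    # "test.jpg" is a placeholder; use a snapshot from one of your cameras.
    print(summarize(time_detection("test.jpg")))
```

The first request after switching modules may include model loading, so it is probably worth sending one warm-up request (or ignoring the max) before comparing medians.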

Thanks Mike; I'll give it a try.

For your GT710 you need to install CUDA 10.2 and use the Object Detection (YOLOv5 3.1) module.


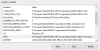

No joy so far.

You need to install all of the below


Also, nothing works in the CPAI Explorer page for Object Detection or Custom Object Detection.

No joy so far.

Getting the following in the CPAI log:

2023-01-27 12:12:59: Object Detection (YOLOv5 3.1): Detecting using ipcam-combined in Object Detection (YOLOv5 3.1)

2023-01-27 12:12:59: Object Detection (YOLOv5 3.1): Unable to load model at C:\Program Files\CodeProject\AI\modules\YOLOv5-3.1\custom-models\ipcam-combined.pt (CUDA error: no kernel image is available for execution on the device) in Object Detection (YOLOv5 3.1)
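The "no kernel image is available for execution on the device" error generally means the PyTorch build used by that module was not compiled with GPU code for your card's compute capability. The GT 710 is in the Kepler list above (compute capability 3.5 per Nvidia's CUDA GPU list). A rough sketch for checking this, assuming it is run with the module's own Python environment and a PyTorch version that provides `torch.cuda.get_arch_list()`; the rule in `can_run` is a simplification (same-major binary compatibility plus PTX JIT), not PyTorch's own check.

```python
from typing import Iterable, Tuple

Capability = Tuple[int, int]


def parse_arch(tag: str) -> Tuple[str, Capability]:
    """Split a tag such as 'sm_35' or 'compute_86' into ('sm', (3, 5))."""
    kind, _, rest = tag.partition("_")
    digits = "".join(ch for ch in rest if ch.isdigit())  # drops suffixes like 'a'
    return kind, (int(digits[:-1]), int(digits[-1]))


def can_run(capability: Capability, arch_list: Iterable[str]) -> bool:
    """Rough check of whether a build targeting arch_list has kernels for this GPU."""
    for tag in arch_list:
        kind, (major, minor) = parse_arch(tag)
        # Compiled binaries (sm_XY) run on the same major version with an equal or higher minor.
        if kind == "sm" and major == capability[0] and minor <= capability[1]:
            return True
        # Embedded PTX (compute_XY) can be JIT-compiled for equal or newer GPUs.
        if kind == "compute" and (major, minor) <= tuple(capability):
            return True
    return False


if __name__ == "__main__":
    import torch  # run with the module's own Python so this is the PyTorch it uses

    if not torch.cuda.is_available():
        print("This PyTorch build cannot see a CUDA device")
    else:
        cap = torch.cuda.get_device_capability(0)
        archs = torch.cuda.get_arch_list()
        print(torch.cuda.get_device_name(0), cap, archs)
        print("matching kernels found" if can_run(cap, archs) else "no matching kernels for this GPU")
```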

You can open a command prompt and run nvidia-smi; this will show the active CUDA version.

Also, nothing works in the CPAI Explorer page for Object Detection or Custom Object Detection.


I will check and see if I have some pieces of CUDA 11 on the machine...

I am not sure what is happening. Can you post all the details of the issue you are having on the CodeProject.AI discussions (link is below)? They are very responsive and will look to fix it.

I downloaded CodeProject, and the CPAI Explorer page for Object Detection or Custom Object Detection works for a minute, and then it keeps popping up with a server connection error. I haven't gone any further with trying to incorporate it into BI, as I assume that if this isn't working, then it won't work in BI?

14:21:39: detect_adapter.py: Server connection error. Is the server URL correct?

14:21:39: detect_adapter.py: Pausing on error for 60 secs.

14:21:39: detect_adapter.py: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?objectdetection_queue: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?

14:21:39: detect_adapter.py: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?objectdetection_queue: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?

14:21:39: detect_adapter.py: Pausing on error for 60 secs.

14:21:39: detect_adapter.py: Pausing on error for 60 secs.

14:21:39: detect_adapter.py: Server connection error. Is the server URL correct?

14:21:39: detect_adapter.py: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?objectdetection_queue: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?

14:21:39: detect_adapter.py: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?objectdetection_queue: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?

14:21:39: detect_adapter.py: Server connection error. Is the server URL correct?

14:21:39: detect_adapter.py: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?objectdetection_queue: [ClientConnectorError] : Unable to check the command queue objectdetection_queue. Is the server URL correct?
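The `ClientConnectorError` lines mean the module's adapter could not open a connection to the server it polls for the command queue. A quick way to see whether anything is listening at all is a plain TCP connect. This sketch assumes the server's default port 32168 on localhost, so substitute whatever host and port your install is configured with.

```python
import socket

# Assumed default CodeProject.AI Server port; change it if your install differs.
DEFAULT_PORT = 32168


def server_reachable(host: str = "localhost", port: int = DEFAULT_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unresolvable host, ...
        return False


if __name__ == "__main__":
    ok = server_reachable()
    print("server is listening" if ok else "nothing listening; check the service and the port")
```

A successful connect only proves something is listening on that port; if it succeeds but the errors continue, the URL the module is configured with is the next thing to check.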

Yep, 11.4. Working on getting rid of the different version.

You can open a command prompt and run nvidia-smi; this will show the active CUDA version.


Odd. This is what I see.

Yep, 11.4. Working on getting rid of the different version.

Try the steps below:

Odd. This is what I see.


I might need to dig through the registry.

I did this before to get rid of all traces of CUDA; I was having a similar error trying to run Deepstack in Docker under WSL.

As I recall, I had to look and look and delete everything I found until the error went away.

I keep going back to Deepstack. Unfortunately, changing the CUDA checkbox on the AI tab broke BI for a few hours.

Rebuilt the database; everything is working now.

Staying with Deepstack on the Nano. I could roll back to CPAI 1.6.8. That one worked well for about 6 weeks.

I might also hold off until the versions do not end with "beta".


I am not sure what is happening. Can you post all the details of the issue you are having on the CodeProject.AI discussions (link is below)? They are very responsive and will look to fix it.

CodeProject.AI Discussions - CodeProject

www.codeproject.com

OK, I guess I have OCD about this. I keep saying I'm going to give up on this and just let the Jetson run it.

Try the steps below:

- remove CUDA 10.2

- delete all the CUDA folders

- remove all the CUDA paths

- reboot

- install CUDA 10.2
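To double-check the "remove all the CUDA paths" step after the reboot, you could list the PATH entries and CUDA_PATH* variables that the current session actually sees. A sketch assuming the default Windows install location (...\NVIDIA GPU Computing Toolkit\CUDA\vX.Y\...); it only reads the environment of the process it runs in, so it will not find leftovers in the registry or in folders that are not on PATH.

```python
import os
import re
from typing import Iterable, List, Set

# Matches the version folder of the default Windows install location,
# e.g. C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\bin
VERSION_RE = re.compile(r"CUDA[\\/]+v(\d+\.\d+)", re.IGNORECASE)


def cuda_path_entries(path_value: str, sep: str = os.pathsep) -> List[str]:
    """Return the PATH entries that mention CUDA."""
    return [p for p in path_value.split(sep) if "cuda" in p.lower()]


def cuda_versions(entries: Iterable[str]) -> Set[str]:
    """Collect the vX.Y version folders referenced by the given paths."""
    found = set()
    for entry in entries:
        match = VERSION_RE.search(entry)
        if match:
            found.add(match.group(1))
    return found


if __name__ == "__main__":
    entries = cuda_path_entries(os.environ.get("PATH", ""))
    for entry in entries:
        print("PATH:", entry)
    # On Windows the CUDA installer also sets CUDA_PATH and per-version CUDA_PATH_Vx_y variables.
    for name, value in sorted(os.environ.items()):
        if name.upper().startswith("CUDA_PATH"):
            print(f"{name} = {value}")
    print("CUDA versions on PATH:", sorted(cuda_versions(entries)) or "none")
```

If more than one version shows up, the leftover entries are the ones to remove before reinstalling CUDA 10.2.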


OK, here is where I am.

OK, I guess I have OCD about this. I keep saying I'm going to give up on this and just let the Jetson run it.

I'm reading that nvidia-smi shows the highest CUDA version the installed Nvidia driver supports.

The command nvcc --version shows the version of the CUDA toolkit that is currently installed (the nvcc found on the PATH).

Reference:

Different CUDA versions shown by nvcc and NVIDIA-smi

I am very confused by the different CUDA versions shown by running which nvcc and nvidia-smi. I have both cuda9.2 and cuda10 installed on my ubuntu 16.04. Now I set the PATH to point to cuda9.2. So...

stackoverflow.com

"I think I've seen this exact question come up multiple times over the last couple days. But I can't seem to find a duplicate now. The answer is: nvidia-smi shows you the CUDA version that your driver supports."

Like this:

Microsoft Windows [Version 10.0.19045.2486]

(c) Microsoft Corporation. All rights reserved.

C:\Users\ftsadmin>nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2019 NVIDIA Corporation

Built on Wed_Oct_23_19:32:27_Pacific_Daylight_Time_2019

Cuda compilation tools, release 10.2, V10.2.89

C:\Users\ftsadmin>nvidia-smi

Sat Jan 28 07:52:08 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 473.81 Driver Version: 473.81 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... WDDM | 00000000:01:00.0 N/A | N/A |

| 50% 0C P0 N/A / N/A | 572MiB / 2048MiB | N/A Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

No running processes, because I have the CPAI service disabled.
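Putting the two outputs side by side: `nvcc --version` reports the toolkit release (10.2 here) and the nvidia-smi header reports the highest CUDA version the driver supports (11.4 here). A toolkit release at or below the driver's number is the expected pairing, so 10.2 with an 11.4-capable driver is not in itself a conflict. A sketch that pulls both numbers out of the command output; the regular expressions are assumptions based on the output format shown above.

```python
import re
import subprocess
from typing import List, Optional, Tuple

Version = Tuple[int, int]


def nvcc_release(text: str) -> Optional[Version]:
    """Toolkit version from `nvcc --version`, e.g. 'release 10.2, V10.2.89' -> (10, 2)."""
    m = re.search(r"release (\d+)\.(\d+)", text)
    return (int(m.group(1)), int(m.group(2))) if m else None


def driver_cuda_version(text: str) -> Optional[Version]:
    """Highest CUDA version the driver supports, from the nvidia-smi header."""
    m = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", text)
    return (int(m.group(1)), int(m.group(2))) if m else None


def toolkit_ok(toolkit: Version, driver_max: Version) -> bool:
    """A toolkit at or below the driver's supported CUDA version is the expected pairing."""
    return toolkit <= driver_max


def run(cmd: List[str]) -> str:
    """Run a command and return stdout, or '' if the executable is not found."""
    try:
        return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout
    except FileNotFoundError:
        return ""


if __name__ == "__main__":
    toolkit = nvcc_release(run(["nvcc", "--version"]))
    driver_max = driver_cuda_version(run(["nvidia-smi"]))
    print("toolkit:", toolkit, "driver supports up to:", driver_max)
    if toolkit and driver_max:
        print("OK" if toolkit_ok(toolkit, driver_max) else "toolkit is newer than the driver supports")
```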

I have also checked all the CUDA paths in the user and system environment variables; they all point to CUDA 10.2, so that should be OK.


I'm going to check which driver version goes with CUDA 10.2, install that Nvidia driver, and see what happens.

I might also try installing the latest version of Blue Iris, reluctantly, because I have been sticking with critical or highly stable updates for the last year; I get fewer problems that way.