IAmATeaf

I doubt it. I assume it works with 5, 10, 15 or 20 fps, but perhaps only with a key frame interval of precisely 1s.

That’s very true, had a brain fart moment and forgot all about that.

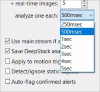

Could be that the moment when a fast-moving car was in view happened to fall in the 1-second interval between two DeepStack sends. The DS analyzer wouldn’t pick up on this coincidence because I don’t think it sends the exact frames it sent during the original incident.

Thinking about this, I’ve realised that the requirement of waiting for a key frame to arrive from the camera before an image can be sent to DeepStack, and at one-second intervals, is not valid. When recording continuously, Blue Iris has already memorised the preceding key frame. Prior to DeepStack, complete alert images were always captured in BI the instant they “turned red”, regardless of when key frames occurred.

So this means that I no longer understand why DeepStack cancels many of the cars that are only in view for roughly one second when BI has captured one perfect image immediately following the pick-up time.

Can anyone please explain?

I can see that the deepstack.cc guide says to install Docker on Windows and run DeepStack as a Docker container (the opposite of the guide shared later in their docs).

Is Docker the better way?

Anyone have a way to actually get these confirmed alert images (snapshots only, not the clip) backed up somewhere? The alerts' "FTP image upload" option doesn't actually use the alert image. All other options seem to include non-confirmed alerts as well.

Did you find a solution for this?

I wrote a script to copy the alert image elsewhere when certain AI objects are detected, such as faces, using the &ALERT_PATH macro (which is not even a path, it’s just a filename). It works most of the time, but BI craps out every now and then.

Would you mind sharing your script?
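This is not the script mentioned above, just a rough sketch of how something like it could look in Python. It assumes BI runs it as an alert action with two parameters: the &ALERT_PATH filename and a text string of detected AI labels (passing the labels via a macro such as &MEMO is an assumption here, as is the label format `person:87%,car:64%`). The folder paths, the label list and the function names are all made up for illustration. The short retry loop is only a guess at one cause of the occasional failures (the image not being on disk yet when the script starts).

```python
import shutil
import sys
import time
from pathlib import Path

# Hypothetical locations; change these to match your own setup.
ALERTS_DIR = Path(r"C:\BlueIris\Alerts")
BACKUP_DIR = Path(r"D:\AlertBackup")
WANTED_LABELS = {"person", "face"}


def wanted(memo, labels=WANTED_LABELS):
    """Return True if any wanted label appears in the label text.

    The text is assumed to look like 'person:87%,car:64%'.
    """
    found = {
        part.split(":")[0].strip().lower()
        for part in memo.split(",")
        if part.strip()
    }
    return bool(found & set(labels))


def copy_alert(filename, alerts_dir, backup_dir, retries=5, delay=1.0):
    """Copy the alert image to the backup folder.

    Retries a few times in case the file has not been written yet.
    Returns the destination path, or None if the file never showed up.
    """
    # The macro yields only a filename, so join it with the alerts folder.
    src = Path(alerts_dir) / Path(filename).name
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    for _ in range(retries):
        if src.is_file():
            return Path(shutil.copy2(src, backup_dir / src.name))
        time.sleep(delay)
    return None


if __name__ == "__main__" and len(sys.argv) >= 3:
    alert_file, memo_text = sys.argv[1], sys.argv[2]
    if wanted(memo_text):
        result = copy_alert(alert_file, ALERTS_DIR, BACKUP_DIR)
        print(f"copied to {result}" if result else f"not found: {alert_file}")
```

In the alert action you would then point BI at the Python interpreter and pass the script path followed by the two macros as parameters. Filtering on the labels inside the script keeps non-matching alerts out of the backup folder.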