Wow! Thanks, that is a big difference in time, but also a big difference in hardware. I don't think I can get my personal finance department to spring for that kind of hardware yet.

In my limited experience with the Windows version of Deepstack, I average around 600 ms. That's on a Threadripper 1950X with 16 cores, 64 GB RAM and Samsung M.2 drives with roughly 3000 MB/s read speed. If you have less than 16 GB of RAM, that could be a factor, since I'm seeing over 8 GB of RAM usage across all the Deepstack processes. I'm not 100% sure, but I think it tries to use all of my cores, so that certainly is a factor too. I could never get it to work, but if you have a good NVIDIA card you could try the "GPU" version in Docker.

[tool] [tutorial] Free AI Person Detection for Blue Iris

- Thread starter GentlePumpkin

Chris Dodge

Pulling my weight

I tried out the VorlonCD/bi-aidetection fork of AI Tool that was posted by Chris Dodge and wow, there are a lot of nice improvements. I especially like the ability for AI Tool to automatically start DeepStack in Windows and not have to deal with the DeepStack console that can't be closed or minimized. This feature makes running DeepStack on the same computer that runs Blue Iris usable for me. My BI computer has a couple of monitors attached that constantly display the UI3 web interface. I could not use DeepStack in Windows without the console covering up a large part of one monitor.

The fork is up and running on my BI computer and it's mostly doing what I want, but there is one issue that I have not been able to resolve. When the camera sees motion it drops an image into D:\BlueIris\aiinput. AI Tool detects the new file in the folder and sends it to DeepStack where it's processed, the results are returned, and AI Tool handles it accordingly. The problem is that AI Tool then sends the same image to DeepStack again. Currently AI Tool is sending a single image to DeepStack twice, but at one time with a different install it was sending every image three times. Everything is working, but DeepStack is having to do two to three times the amount of work that it should. I have checked the D:\BlueIris\aiinput folder to be sure that it does not contain duplicate images, and it does not.

While troubleshooting I found that removing the trigger URL stops the duplicate processing. However, duplicate processing is performed even if the image is considered irrelevant and the camera is not triggered. Here's the full trigger line. It is the default URL that is automatically filled in, but I have changed it to HTTP and port 81, and also added my credentials.

Try removing Settings tab > Input path - While duplicate paths SHOULD be filtered out by default maybe there is a bug. Just leave the input paths for each Camera
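For anyone wondering how the same folder can end up being watched twice, here is a minimal Python sketch of duplicate-path filtering. It is not AI Tool's actual code; the function name `unique_input_paths` is made up for illustration, and it assumes Windows-style paths that differ only in case, slash direction, or a trailing separator.

```python
import ntpath  # Windows path rules, usable on any OS


def unique_input_paths(paths):
    """Return the paths with duplicates removed, keeping the first spelling seen.

    Two paths count as the same folder if they match after normalizing
    slashes, trailing separators, and letter case (Windows semantics).
    """
    seen = set()
    unique = []
    for p in paths:
        key = ntpath.normcase(ntpath.normpath(p))
        if key not in seen:
            seen.add(key)
            unique.append(p)
    return unique


# The same folder written three different ways collapses to one entry.
watched = unique_input_paths([
    "D:\\BlueIris\\aiinput",
    "d:\\blueiris\\aiinput\\",
    "D:/BlueIris/aiinput",
])
print(watched)
```

If a watcher is created per entry without a step like this, each new image raises one event per duplicate entry, which would match the two or three submissions per image described above.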

Chris Dodge

Pulling my weight

I've noticed a new issue with the Vorlon fork that appears to be randomly deleting images and entries from the log file way too soon. For some reason, it is deleting the files only a few minutes after they were captured, and then they either do not show up in the history list, or if they are still in the list, you get an unhandled exception error when clicking on the entry. Here is some of the log file - note the time stamps:

Try closing the app and deleting \Cameras\history.csv. I think if it gets corrupt it behaves like that. Also I think you might have to delete that file the first time after upgrading to my version anyway.

Thank you! That makes sense. I found one of my cameras was generating a flood of images - the motion detector on the clone was set way too sensitive. Fixed that and now Thread queue time is down to 0 most of the time, but Deepstack is still running in the 1500-2300ms range. I am running this on an i7-7700K at 4.2GHz with a Toshiba RD400 SSD. Is there a way to dig into Deepstack and see exactly where the delay is coming in? Anything that could help it short of the hardware change? What is an average good time that I should be striving for?

Thanks!

I don't know if my recent experience might help you. I am running BI 5, AI Tool, and DeepstackAI on Windows 10 with an Intel i5-4670, 3.4 GHz, 16 GB RAM. I am running 11 cameras - 6 @ 0.9 MP, 3 @ 2 MP, 2 @ 5 MP, all at 10 FPS with substreams. There are also several cloned cameras but I don't think those affect resources too much. I'm using Intel hardware acceleration (I think Intel + VPP on most cameras). Blue Iris reports a total of about 168 MP/s and 4100 kB/s.

Before enabling substreams I was seeing about 2 seconds per image in Deepstack and about 30% CPU. After enabling substreams my CPU usage dropped closer to 20%. Deepstack also got a little faster, I assume because Blue Iris was using less CPU. It was running closer to 1 second per image, sometimes a little more, sometimes a little less. I tried using the high/medium/low options when starting Deepstack but never noticed much of a difference in the image processing time at any setting.

I decided to try using Docker Desktop for Windows to run Deepstack. The most profound change in doing this is that there is a huge difference between starting Deepstack with the mode set to high, medium, or low. When running at high it was taking about 2 seconds per image. When running at medium it was close to 1 second per image. Running at low I am consistently getting 700ms to 900ms image processing times. I don't know if the Windows GUI startup for Deepstack is ignoring the high/medium/low setting or if something else is going on, but the fastest response on my system is definitely running under Docker with Mode=Low.

I had set the time between BI writing JPEGs to try to avoid a backlog in Deepstack, so originally I only wrote one image every 2 seconds. I am masking all but a small area near my front door, so I would sometimes miss alerts if the person moved in and out of the unmasked area fast enough. After switching to substreams I was able to set BI to write a JPEG every 1.3 seconds. After switching to Deepstack in Docker with Mode=Low I am now able to write a JPEG every second without a backlog and could probably bring that down to 0.9 seconds without any problem.
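To make the backlog reasoning concrete, here is a small Python sketch. It assumes images are processed strictly one at a time and that every image takes the same time, which is a simplification; the function name `queue_delays` is just for illustration.

```python
def queue_delays(interval_s, processing_s, n_images):
    """Return how long each image waits before processing starts.

    Assumes one image is written every `interval_s` seconds and a single
    worker needs `processing_s` seconds per image (simplified model).
    """
    delays = []
    worker_free_at = 0.0
    for i in range(n_images):
        arrival = i * interval_s
        start = max(arrival, worker_free_at)  # wait if the worker is busy
        delays.append(start - arrival)
        worker_free_at = start + processing_s
    return delays


# Processing faster than the write interval: nothing ever waits.
print(queue_delays(1.0, 0.8, 4))
# Processing slower than the write interval: the wait grows with every image.
print(queue_delays(1.0, 2.0, 4))
```

In this model the wait stays at zero as long as the processing time is below the write interval, and grows without bound once it is above it, which is why the write interval can only be lowered after the processing time comes down.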

Currently with Deepstack in Docker I'm seeing about 23% total CPU with occasional spikes as high as 40%. Blue Iris is using about 15% of that. The attached image shows the CPU spike when I triggered my camera to write 4 JPEG images two times in a row. CPU jumps to about 80% to 90% total while processing the images.

Also I tried changing the JPEG size from the 5MP main stream image to the VGA substream image and didn't notice a huge difference in response time from Deepstack, so I put it back to the larger size.

Has anyone seen the camera taking a long... time to save the record snapshot image to the AiInput folder?

I'm running 4 cameras (all 4 megapixels or less and hard wired with Cat5e), and all of them record the event within 3 seconds of being triggered except one. With that one camera, the recording starts about 20 to 50 seconds late on some captures, while others are within 3 seconds of the trigger. I believe this is caused by the camera image not being saved to the AiInput folder quickly. As an example, the image is taken at 10:50:16am and the recording starts at 10:50:37am, which is also roughly the time the file is created in the AiInput folder. I tested the network run with a cable tester and iperf and everything looks good, which leads me to ask: could it be an issue with the camera itself? I've tested manually triggering the camera in BlueIris and still get the delay, so it seems to be the saving of the file to the BlueIris server. Has anyone had any experience with an issue like this? Thanks!

Camera

Hikvision DS-2CD2132-I

V5.2.0 build 140721

Chris Dodge

Pulling my weight

A few thoughts:

- Make sure that the AI cameras have Record > "Only when triggered" checked.
- The AIINPUT folder really should be on a fast solid state drive. An M.2 drive is ideal if your motherboard supports it, or you can get an add-in card for it. You reallllllllly don't want to be using a mechanical drive for this.
- Make sure the AIINPUT folder is set to delete files before thousands build up, since that in itself can make file access slower.
- Otherwise, maybe the hard drive is going bad. In an admin command prompt, run "CHKDSK /F /R X:" where X is the drive in question. This will take a few hours and may ask you to take the drive offline or reboot in order to do the scan.
- If you cannot avoid a mechanical drive, clean it out and do a defrag.
- If all else fails, try increasing BI > Camera (original, not AI) > Record > pre-trigger video buffer up to 15+ secs.
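If the folder's own cleanup setting is not doing the job, a scheduled script can do the same thing. This is only a sketch: the function name `purge_old_images`, the 5 hour default, and the `*.jpg` pattern are illustrative assumptions, not anything taken from Blue Iris or AI Tool.

```python
import time
from pathlib import Path


def purge_old_images(folder, max_age_hours=5.0, pattern="*.jpg"):
    """Delete files in `folder` matching `pattern` that are older than the cutoff.

    Age is judged by the file's modification time. Returns the number of
    files removed. Subfolders are not touched.
    """
    cutoff = time.time() - max_age_hours * 3600
    removed = 0
    for path in Path(folder).glob(pattern):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed += 1
    return removed


# Example call (hypothetical path, uncomment to use):
# purge_old_images(r"D:\BlueIris\aiinput", max_age_hours=5)
```

Run it from Task Scheduler every hour or so. Try it on a copy of the folder first, since deleted images cannot be reviewed later in the history list.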

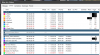

I went in and tried setting Deepstack MODE=Low, and anecdotally, it seems to have shaved a second off each image processing time. Curious? I believe I have my substream set up correctly. You can see here that the AI_sd cameras show no Bitrate, so I am assuming that means they are properly cloning off the main streams seen below.

I have my AI cameras set to record a snapshot every 4 seconds when triggered. I have not resized the AI cameras either. I might try that and see if it makes any difference on my install.

Thanks for the tips!

John

Thanks Chris. Tried deleting and restarting, but it is still deleting the files and history. The triggers seem to be working, so I do have the clips in BI, but I can't go back in the AITool and look at what triggered the event later. Strange. Let me know if there is something else I can try to troubleshoot.

Thanks for all your help!

When you say you have your substreams set up I think you mean you cloned cameras. The cloned cameras don't show a bitrate because there is no additional network load for the clones. However you don't have anything showing under Sub FPS and Sub Bitrate. That is where you'd see the stats for substreams.

You might want to consider dropping everything to 10 or 15 frames per second from the 20 and 30 FPS rates you're currently using. Unless you have a very specific need for smoother video you're just pulling more network and processor resources without much gain. I run everything at 10 FPS with a key frame every 20 frames.

As you can see in the attached image my Front_Camera is 5 MP, and the main stream is 471 kB/s at 10 FPS, but the substream that is being analyzed for motion is only 71 kB/s also at 10 FPS. Your 5.3 MP Front Yard camera is running 715 kB/s at 20 FPS (mine uses roughly 34% less bandwidth). Your 2.1 MP Front Door is running 478 kB/s at 30 FPS. My 2.1 MP LivingRoom camera is running 140 kB/s at 10 FPS (29% of the network bandwidth of yours).

If you are happy with the performance you're seeing you don't need to change anything, but if you want to speed things up you have a lot of room to reduce resource usage.
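For anyone who wants to run the same comparison on their own cameras, the arithmetic is just a ratio of the bitrates shown in the BI status window. A quick Python sketch using the numbers from this post (the helper name `percent_of` is made up):

```python
def percent_of(mine_kbs, yours_kbs):
    """Return `mine_kbs` as a percentage of `yours_kbs`."""
    return 100.0 * mine_kbs / yours_kbs


# 5 MP main stream at 10 FPS vs. 5.3 MP at 20 FPS
front = percent_of(471, 715)
# 2.1 MP at 10 FPS vs. 2.1 MP at 30 FPS
indoor = percent_of(140, 478)

print(f"Front camera: {front:.0f}% of yours, {100 - front:.0f}% less")
print(f"2.1 MP camera: {indoor:.0f}% of yours")
```

The cameras differ slightly in resolution and scene, so treat the result as a rough guide rather than an exact measure of what lowering the frame rate will save.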

Wow! Thanks, that is a big difference in time, but also a big difference in hardware. I don't think I can get my personal finance department to spring for that kind of hardware yet. I will take a look at the GPU version, but I am going to say my video card is not going to be up to it either, as it is not too powerful.

Here are my response times (docker running with the LOW attribute for DeepstackAi). Total bitrate for my cameras in BI is 8300 kB/s

[GIN] 2020/08/20 - 06:56:36 | 200 | 788.594562ms | 192.168.0.179 | POST /v1/vision/detection

[GIN] 2020/08/20 - 06:56:37 | 200 | 914.015632ms | 192.168.0.179 | POST /v1/vision/detection

[GIN] 2020/08/20 - 06:56:38 | 200 | 811.654492ms | 192.168.0.179 | POST /v1/vision/detection

[GIN] 2020/08/20 - 06:56:40 | 200 | 838.544016ms | 192.168.0.179 | POST /v1/vision/detection

[GIN] 2020/08/20 - 06:56:41 | 200 | 768.397834ms | 192.168.0.179 | POST /v1/vision/detection
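To compare response times between setups without eyeballing the console, the latency column can be pulled out of log lines like the ones above. This is a rough Python sketch that assumes the duration is printed between pipe characters with an `ms` or `s` suffix, as in this sample; other units are skipped.

```python
import re
from statistics import mean

# Matches the duration column, e.g. "| 788.594562ms |" or "| 1.2s |"
LATENCY = re.compile(r"\|\s*(\d+(?:\.\d+)?)(ms|s)\s*\|")


def latencies_ms(lines):
    """Extract request durations in milliseconds from GIN-style log lines."""
    result = []
    for line in lines:
        match = LATENCY.search(line)
        if match is None:
            continue  # not a request line, or a unit this sketch ignores
        value, unit = float(match.group(1)), match.group(2)
        result.append(value * 1000.0 if unit == "s" else value)
    return result


sample = [
    "[GIN] 2020/08/20 - 06:56:36 | 200 | 788.594562ms | 192.168.0.179 | POST /v1/vision/detection",
    "[GIN] 2020/08/20 - 06:56:37 | 200 | 914.015632ms | 192.168.0.179 | POST /v1/vision/detection",
    "[GIN] 2020/08/20 - 06:56:38 | 200 | 811.654492ms | 192.168.0.179 | POST /v1/vision/detection",
    "[GIN] 2020/08/20 - 06:56:40 | 200 | 838.544016ms | 192.168.0.179 | POST /v1/vision/detection",
    "[GIN] 2020/08/20 - 06:56:41 | 200 | 768.397834ms | 192.168.0.179 | POST /v1/vision/detection",
]
times = latencies_ms(sample)
print(f"min {min(times):.0f} ms, mean {mean(times):.0f} ms, max {max(times):.0f} ms")
```

Five requests is a small sample, so collect a few hundred lines during a busy period before drawing conclusions about one mode or host versus another.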

This is on a Threadripper 2950x, 128GB of RAM. OS is Proxmox where I run a whole bunch of virtual servers (20+ virtual servers - Windows and Linux).

More info here on my setup - New AMD Ryzen

Since the post in the thread mentioned above I have also added my real desktop to this environment (Win10 with AMD 5700 XT, USB and audio card all passthrough from ProxMox to the virtual Win10). I get around 98% of bare metal performance.

So given all the other stuff I am running on my ProxMox host I am very happy with the response time from DeepStack.

robpur

Getting comfortable

Try removing Settings tab > Input path - While duplicate paths SHOULD be filtered out by default maybe there is a bug. Just leave the input paths for each Camera

Thanks for the suggestion, it fixed the problem. Also, thanks for all your hard work to make AI Tool better!

Thank you for the suggestions. I'm running on a SSD right now and keep the AiInput under 1GB or 5 hours. The odd thing is it's only this one camera and the others are fine so to me it has to be the camera, cable run or port on the switch. Easy to run a new cable and try a different switch port but not ready to spend the $ on a new camera yet.

Chris Dodge

Pulling my weight

If you can watch the camera's stream with only a few seconds of delay for live events, and the stream is pretty constant, then I wouldn't think it's the camera or wiring.

pmcross

Pulling my weight

I am currently running Deepstack in Docker on Windows and it is performing fairly well in regards to CPU consumption with the exception of certain times when the CPU is maxed out due to images with human activity (at the same time) in the front and back of my house, which doesn't happen frequently. Nevertheless, I am planning on buying another PC to run Deepstack solely to separate it from my BI machine. Before doing this I was looking into the Coral USB Accelerator and I see that you are/were using it. Did you see a decrease in host CPU consumption running the Coral USB Accelerator versus Deepstack?

This is particularly hard, because e.g. for objects further away something is detected in frame 1, but not anymore in frame 10 (noise, lighting conditions, ...). If you want something like this, you would definitely need object tracking. I saw this with my Google Coral implementation too (and am working on object tracking).

You also need object tracking for e.g. moving shadows due to trees. It will keep on detecting your cars if you do not track them.

This means you would have to throw images to the AI tool at a much higher frequency (even when no motion detected).
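Short of real tracking, a cheap way to stop re-alerting on a parked car is to compare each detection's bounding box with the boxes from the previous image and ignore the ones that overlap almost completely. The sketch below uses intersection over union (IoU) for that. It is a stand-in for object tracking, not a tracker, and the 0.8 threshold and function names are assumptions for illustration. Boxes are `(x_min, y_min, x_max, y_max)` in pixels.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union else 0.0


def new_objects(previous, current, threshold=0.8):
    """Return boxes in `current` that do not closely match any box in `previous`."""
    return [
        box for box in current
        if all(iou(box, old) < threshold for old in previous)
    ]


# A parked car that barely moved is filtered out; a new detection is kept.
parked = [(100, 100, 200, 200)]
latest = [(101, 100, 201, 200), (300, 50, 340, 150)]
print(new_objects(parked, latest))
```

As the post points out, distant objects can drop out for a frame or two because of noise or lighting, so comparing only against the single previous image will occasionally let a static object through. Keeping the boxes from the last several images would reduce that.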

Would anyone be willing to update the tool (until the OP can) and add an option for a negative flag alongside the trigger one for BI? Right now it triggers when there is a match, but with the BI flag option you can also have it send 0 instead of 1, which will cancel an alert. So regardless of the result, the AI would send a yes or no response. This would remove the need for camera clones, at least for alerts, because it could cancel false positives using the built-in BI wait period you can define to allow alert cancellations. As-is, this ends up needing a clone from what I can tell.

IAmATeaf

Known around here

That makes no sense to me? AITools triggers the cam if DQ reports something of interest. When would you want to send a trigger to cancel?

Because I don't need the camera triggered. I need to get an alert or not.

My cameras record 24/7 so I have nothing to trigger. I simply want a notification that valid motion was detected.

I want to use the native BI settings which allow for an alert to be canceled if it's invalid.

This is what Sentry does: it confirms or rejects an alert, and that's how you avoid needing clones to get alerts.

As-is I must use clones because I have to use camera 1 to capture "alerts" which AITool uses. If valid I now have to send a trigger to a clone camera 2 to capture another image to send the actual alert because I can't use camera 1 for notifications since it's capturing everything junk or valid.

If I could send the flag=0 command that BI supports and what it was created for, I can use the same camera for both. It confirms if valid, rejects if not and the actual alerts are correct in BI now without needing separate clones/cameras or other complexity.
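To spell out the yes/no idea, here is a small Python sketch of the decision step only. It assumes detections arrive as a list of dicts with `label` and `confidence` keys, the way the detection endpoint returns them; the wanted labels, the 0.5 threshold, and the function names are placeholders. How the answer is then sent to BI is left out on purpose, since the exact command is not shown in this thread.

```python
def is_relevant(predictions, wanted=("person",), min_confidence=0.5):
    """True if any prediction has a wanted label at or above the confidence threshold."""
    return any(
        p.get("label") in wanted and p.get("confidence", 0.0) >= min_confidence
        for p in predictions
    )


def alert_decision(predictions, wanted=("person",), min_confidence=0.5):
    """Always return an answer: 'confirm' for a valid alert, 'cancel' otherwise."""
    return "confirm" if is_relevant(predictions, wanted, min_confidence) else "cancel"


# A person at 91% confirms the alert; a dog or a low-confidence person cancels it.
print(alert_decision([{"label": "person", "confidence": 0.91}]))
print(alert_decision([{"label": "dog", "confidence": 0.88}]))
print(alert_decision([{"label": "person", "confidence": 0.30}]))
print(alert_decision([]))
```

The difference from the current behavior is that the "cancel" branch also produces an action, instead of simply doing nothing when the image is irrelevant.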

pmcross

Pulling my weight

I believe that you have an additional clone that you don’t need. I have one clone for each camera. The main records 24/7 like yours. I then have motion detection enabled only on the clones. AI Tool then triggers my main camera(s) if an object meets the criteria. I like your thinking with the 0 or 1 for a valid alert, but I don’t fully understand, because AI Tool wouldn’t trigger a camera if the event didn’t contain a valid object. If you don’t want to use clones you can flag valid alerts from AI Tool and then filter the app or web interface to only show flagged alerts. This is the way that Sentry does it. The major disadvantage of not using clones is that the alerts view isn’t as neat, because every motion event shows up and not just valid alerts.

OccultMonk

Young grasshopper

Last time I tried GPU (a few weeks ago) it worked well, but stopped working after 30 min or an hour or so. I read others had the same problem. Has the GPU version been improved, or does it still stop working after some time?