So, there is something I'm not understanding. If you don't want a video taken at all then just set up your areas not to start recording if it doesn't meet your criteria. That is, your area will only start recording if you want a person and it is a person. Are you saying that you do want the video recorded, but you don't want an alert? Right now, On Guard just says "do you want a recording or don't you".

Haha, it was hard to type it out. Basically the flow is like this (I am using a single camera):

1. Camera detects motion

2. Camera takes picture

3. AI analyzes picture

4. AI determines person (or animal, or car, whatever)

5. AI sends URL for camera with &flagalert=1

6. Video captured by that camera gets flagged.

7. When I check my alerts on the mobile app I have it set to show me flagged alerts and I see this alert.

Other situation:

1. Camera detects motion

2. Camera takes picture

3. AI analyzes picture

4. AI sees no object, or an object we're not looking for (sees a car, but we're only watching for a person)

5. AI sends URL for camera with &flagalert=0

6. Video captured by camera does not get flagged, but gets marked "Cancelled"

7. In mobile app, video does not show under flagged alerts, so I don't see it. It will show up under the "Cancelled Alerts" filter though.

Maybe that flow makes it easier. Either way, I would completely switch over to this if we could send cancels over. The ability to watch for certain things in certain parts of the camera's frame is way more powerful than what I am using now.
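The two flows above boil down to one decision: flag the alert if the AI saw something we are watching for, otherwise cancel it. Here is a minimal sketch of that decision in Python. Only the `flagalert=1` / `flagalert=0` values come from the steps above; the function name, the `/admin` path, the `camera` parameter, and the host and camera short name in the usage lines are assumptions for illustration, so check them against your own Blue Iris setup.

```python
from urllib.parse import urlencode


def build_flag_url(base_url, camera, detected, watched):
    """Return the URL the AI tool would call after analyzing a snapshot.

    flagalert=1 when at least one detected label is on the watch list,
    flagalert=0 (cancel) otherwise.
    """
    match = any(label in watched for label in detected)
    query = urlencode({"camera": camera, "flagalert": 1 if match else 0})
    # "/admin" and "camera" are assumed names, not confirmed by the thread.
    return f"{base_url}/admin?{query}"


# Watching only for people on a hypothetical camera called "front".
print(build_flag_url("http://127.0.0.1:81", "front", ["person", "car"], {"person"}))
print(build_flag_url("http://127.0.0.1:81", "front", ["car"], {"person"}))
```

The first call covers the "person seen" flow and the second covers the "car seen, but only watching for a person" flow.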

Yet Another Free Extension for Blue Iris Adding AI Object Detection/Reduction in False Alarms/Enhanced Notification of Activity/On Guard

- Thread starter Ken98045


Deepstack GPU does not run on windows boxes at the moment. It may sometime in the new year but for now you would need to run a linux box to get gpu support.

It does (as of the last 5 days) run in Windows in GPU mode, but it isn't in a standard Windows window (that is, it runs in a command line/PowerShell window). I can live with that. You do not need any kind of Linux setup on Windows. Note that this is a Beta version. However, I have not run into any problems after processing 20,000 or so pictures (we had wind followed by snow so there were a lot of garbage pictures).

So, there is something I'm not understanding. If you don't want a video taken at all then just set up your areas not to start recording if it doesn't meet your criteria. That is, your area will only start recording if you want a person and it is a person. Are you saying that you do want the video recorded, but you don't want an alert? Right now, On Guard just says "do you want a recording or don't you".

So I think it all depends on the fact that I am using a single camera setup. In order to get pictures to send to OnGuard I need to set the motion sensor up to grab a picture. But what also happens is that if I want video recorded, the record tab has to say to record when triggered, because there is no option to only record from an external trigger.

OK, I'm still not understanding completely. If you set up Blue Iris and On Guard properly you will only get a recording at all when all the matching conditions are met. That is, it is a person and you want a person. You set up a dummy, usually hidden camera that is the AI processor that takes snapshots. You set up the "real video" camera to record on an external trigger. There is really zero overhead for this according to Blue Iris. I'm not sure why you wouldn't want that setup.

Sorry if I'm being dense. I just don't quite understand the "real world" scenario you are trying to obtain.


Nah it's all good. I'm weird and don't like having the extra stuff in my Blue Iris, even if I can hide it and there is no cost. With the recent addition of substream support in cameras, I brought everything down to a single camera with no clones.

SyconsciousAu

Getting comfortable

- Sep 13, 2015

- 870

- 826

Note that this is a Beta version.

What's the version number? I'll run a pull and take a look. I don't run a video card in my BI box at the moment, it's headless so why bother, but I'll try it out and if I like I'll invest. What sort of processing times are you getting?

There is a link to the GitHub file location in one of the recent posts. They do have both a new Windows CPU and a Windows GPU version. I suspect the CPU version is faster as well.

My processing time with a recently purchased $300 GeForce 1660Ti (I think it is, and by the way graphics cards are way over-priced right now): at night using IR, 200 to 250ms; during the day/in color, about 250 - 450ms (averaging about 275 - 300). Keep in mind that the images I'm passing are 2560x1920, which is probably way more detail than is actually needed. I think at normal HD definitions it would be substantially faster. Also, I have about a 5-year-old i7/8-core computer. I am also running 4 cameras through Blue Iris. The Blue Iris processing uses a bunch of CPU with that many cameras.

If you try the new CPU version please let me know what your speeds are before/after

UPDATE: Cutting down the .jpg images to 1280x1024 reduced the processing time (daylight) to 135 - 175ms with a rough average of 155ms.
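For anyone wondering how the image size relates to those timings, here is the arithmetic as a quick Python sketch. It only uses the resolutions and rough daylight averages quoted above (275 - 300ms before, about 155ms after); the variable names are made up for the example, and these are one machine's numbers, not a general rule.

```python
# Pixel counts for the two image sizes mentioned above.
full_px = 2560 * 1920      # 4,915,200 pixels
small_px = 1280 * 1024     # 1,310,720 pixels
pixel_ratio = full_px / small_px

# Rough daylight averages quoted in the post, in milliseconds.
before_ms = (275, 300)
after_ms = 155
speedups = [t / after_ms for t in before_ms]

print(f"pixels: {pixel_ratio:.2f}x fewer")
print(f"time:   {speedups[0]:.2f}x to {speedups[1]:.2f}x faster")
```

So about a quarter of the pixels gives a bit under twice the speed. That may mean part of each request's time does not depend on image size, though that is only a guess from one data point.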


SyconsciousAu


If you try the new CPU version please let me know what your speeds are before/after

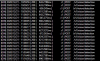

This is from last night, times are in UTC so add 11 hours for east coast of Australia, on the latest docker cpu version on an I7-8700. They are less than half what I was getting on the old windows version of deepstack. It's being fed 1920x1080 images. I've got a machine with a GTX1650Ti in it that I want to test the gpu version on and see how it goes before I invest in a graphics card. It's supposed to be heaps faster but as you point out graphics cards are overpriced and with sub 200ms processing times already, it would just be me chasing e-cool value. Your card seems to be a little better than mine so I'm not expecting much more from the GPU version.

[GIN] 2020/12/22 - 11:02:14 | 200 | 207.5302ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:06:03 | 200 | 184.1671ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:06:07 | 200 | 167.0831ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:13:03 | 200 | 189.9711ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:16:53 | 200 | 226.6581ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:16:57 | 200 | 145.5008ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:20:12 | 200 | 129.8768ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:26:49 | 200 | 139.3011ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:26:53 | 200 | 196.5173ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:33:38 | 200 | 133.7934ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:33:43 | 200 | 158.1181ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:44:07 | 200 | 155.9788ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:44:11 | 200 | 162.5683ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:44:15 | 200 | 139.0174ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:50:25 | 200 | 139.7377ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:57:47 | 200 | 153.1834ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:57:51 | 200 | 139.4095ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:58:04 | 200 | 135.6323ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:58:08 | 200 | 133.1505ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:58:47 | 200 | 137.5958ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 11:58:51 | 200 | 138.5963ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:10:25 | 200 | 145.9303ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:10:51 | 200 | 141.199ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:10:55 | 200 | 136.723ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:11:12 | 200 | 322.52ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:11:16 | 200 | 353.3401ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:30:34 | 200 | 298.3094ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:30:37 | 200 | 151.8819ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:35:02 | 200 | 142.4574ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:35:06 | 200 | 130.2423ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:54:34 | 200 | 157.3879ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:54:38 | 200 | 181.0042ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 12:54:42 | 200 | 143.771ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 13:09:56 | 200 | 149.8727ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 13:10:00 | 200 | 143.3962ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 14:06:20 | 200 | 148.3227ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 14:06:24 | 200 | 142.1804ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 14:06:28 | 200 | 145.1755ms | 172.17.0.1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 14:29:45 | 200 | 149.5144ms | 172.17.0.1 | POST /v1/vision/detection
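If you would rather get an average than eyeball a log like this, here is a minimal Python sketch that pulls the duration column out of `[GIN]` lines. The function name and the regular expression are my own and are only written against the line format shown above; it assumes durations are reported in `ms` and skips anything that is not a `200` response on `/v1/vision/detection`.

```python
import re
from statistics import mean

# Matches the status code and duration columns of a [GIN] log line.
# Only handles durations printed in milliseconds, as in the log above.
GIN_LINE = re.compile(r"\|\s*(\d{3})\s*\|\s*([\d.]+)ms\s*\|")


def detection_times_ms(log_text):
    """Return the durations (ms) of successful detection requests."""
    times = []
    for line in log_text.splitlines():
        found = GIN_LINE.search(line)
        if found and found.group(1) == "200" and "/v1/vision/detection" in line:
            times.append(float(found.group(2)))
    return times


# First three entries from the log above.
sample = """\
[GIN] 2020/12/22 - 11:02:14 | 200 | 207.5302ms | 172.17.0.1 | POST /v1/vision/detection
[GIN] 2020/12/22 - 11:06:03 | 200 | 184.1671ms | 172.17.0.1 | POST /v1/vision/detection
[GIN] 2020/12/22 - 11:06:07 | 200 | 167.0831ms | 172.17.0.1 | POST /v1/vision/detection
"""

times = detection_times_ms(sample)
print(f"{len(times)} requests, mean {mean(times):.1f}ms, max {max(times):.1f}ms")
```

Paste the whole log into `sample` (or read it from a file) to get figures for a full night.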

Deepstack GPU does not run on windows boxes at the moment. It may sometime in the new year but for now you would need to run a linux box to get gpu support.

ah ok. thanks.

oh...a later reply via Ken that it does (for beta). I still gots a 4 x HDMI video card coming as need it to feed 2 or 3 smart tv's anyways.

SyconsciousAu


oh...a later reply via Ken that it does (for beta).

That's not a docker variant, however I've found this

CUDA on WSL

NVIDIA Drivers for CUDA on WSL, including DirectML Support

Through WSL2 and GPU paravirtualization technology, developers can run NVIDIA GPU accelerated Linux applications on Windows. The technology preview driver available here on developer zone includes support for CUDA and Direct ML. This...

developer.nvidia.com

Which may let me run the docker variant.

I'm getting terribly confused about what to do and what not to do.

So...I'll do what I do best: start mashing things together til they fit and work

No, Don't Use CUDA on WSL -- This was just an experiment I tried. I didn't have any clue how soon they'd get the Windows variant out. I wanted to try GPU before that (so I could return my new card if necessary!)

Here is the Beta location: Release DeepStack Windows Version - Beta · johnolafenwa/DeepStack

As far as I can see (30,000 pictures later) it is very stable. I haven't "rated" the actual ability to recognize objects, but it seems fine.

ah ok gotcha. sorry, my brain has been fried a bunch in the last couple weeks with my personal home security issue.

I may end up keeping this 4 HDMI out card anyways as I have multiple HDMI tv's. Will see.

To help with the setup confusion...I am actually taking the time to read your OnGuard-Readme doc

Ah, interesting benchmarks!

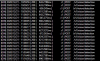

If you don't want to bother with GPU, it might be worth considering putting that same investment towards a CPU instead. I moved to an i7-10900K recently and am pretty impressed with the deepstack performance. Here are my numbers for 1536 x 2048 on the latest windows beta (version DeepStack-Installer-CPU.2020.12.beta.exe):


Code:

[GIN] 2020/12/22 - 15:33:43 | 200 | 324.9982ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:44 | 200 | 265.9744ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:44 | 200 | 267.9993ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:45 | 200 | 201.0007ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:46 | 200 | 185.0009ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:47 | 200 | 204.999ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:48 | 200 | 194.9916ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:49 | 200 | 186.0008ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:50 | 200 | 198.0009ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:51 | 200 | 187.9729ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:52 | 200 | 187.9727ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:53 | 200 | 193.9983ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:54 | 200 | 200.9725ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:55 | 200 | 208.9746ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:56 | 200 | 207.9719ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:33:57 | 200 | 191.0001ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:03 | 200 | 196.9987ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:04 | 200 | 195ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:05 | 200 | 200.9993ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:06 | 200 | 189.9995ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:07 | 200 | 189.0007ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:08 | 200 | 222.0005ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:09 | 200 | 212.0911ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:10 | 200 | 197.9993ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:11 | 200 | 196.9997ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:12 | 200 | 200.9999ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:13 | 200 | 182.9995ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:14 | 200 | 207.0007ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:15 | 200 | 196.9709ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:16 | 200 | 180.999ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:17 | 200 | 198.998ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:18 | 200 | 190.0002ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:19 | 200 | 216.0001ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:20 | 200 | 190.0009ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:21 | 200 | 180.0011ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:22 | 200 | 191.9992ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:23 | 200 | 188.0006ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:24 | 200 | 188.9994ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:35:25 | 200 | 187.9996ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:42 | 200 | 202.9927ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:43 | 200 | 195.9997ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:44 | 200 | 185.9887ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:45 | 200 | 189.9738ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:46 | 200 | 180.9731ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:47 | 200 | 186.9989ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:48 | 200 | 223ms | ::1 | POST /v1/vision/detection

[GIN] 2020/12/22 - 15:36:49 | 200 | 192.0003ms | ::1 | POST /v1/vision/detection

I get the "no connection found at localhost" error: "The AI Detection process was not found at: "

I turned off Windows firewall just to make sure. Disabled bitdefender anti-virus just to make sure. Did put in the API, port 8090, detection API for Deepstack AI. grrr... I'll figure this out eventually. I bet it is a simple thing. Every time I hit "test DeepStack Connection", I get that [GIN] line in the DeepStack status window showing up. So that's something!


SyconsciousAu


No, Don't Use CUDA on WSL -- This was just an experiment I tried.

Did it work?


You need to activate deepstack.

Go to 127.0.0.1:8090

DeepStack AI Server

www.deepstack.cc
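If you want to check the server from outside On Guard, here is a minimal Python sketch that just asks whether anything answers HTTP at that address. The host and port are the ones from this thread; the function name and the timeout are my own choices, and a True result only means something is listening there, not that DeepStack is activated or that detection works.

```python
import urllib.error
import urllib.request


def deepstack_reachable(host="127.0.0.1", port=8090, timeout=3.0):
    """Return True if anything answers HTTP on host:port, False otherwise."""
    url = f"http://{host}:{port}/"
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx page.
        return True
    except (urllib.error.URLError, OSError):
        # Nothing listening, connection refused, or timed out.
        return False


if __name__ == "__main__":
    print("DeepStack reachable:", deepstack_reachable())
```

If this prints False while the DeepStack window is open, the port number is the first thing to double-check.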


and just to mention...still can connect from OnGuard to Deepstack with default Windows Firewall enabled and Bitdefender operational.

jz3082

Young grasshopper

I recently upgraded to the Windows Beta version of Deepstack. It is on an i7-6700. I am running 3-4MP using the main and substreams and a 2MP camera with the mainstream only. All cameras are running at 15FPS with continuous direct to disk recording of the camera mainstreams. This PC is also running HomeSeer, On Guard, and Deepstack. CPU usage is usually around 2% to 4%.

Prior to installing the Windows Beta Deepstack the images were processing in the 650 ms range.

After the Windows Beta install the images are processing in the 250ms range. The images are 1008X576 and are about 570KB each.

I have the clips posting to a PCIe NVME SSD. I am going to set up a RAM disk to help save the SSD and to see if this further improves image processing.
