beepsilver

Getting comfortable

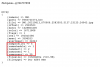

View attachment 98259

so you can see the 'dark'

Also, you can see the DS time with the GPU, and the almost 20 secs it took to find a person. That is why I have 999 in the + images...

That was an Amazon truck that triggered the camera, and it took 19+ secs for the driver to exit and be visible.

What would have happened before is that the people clone would motion trigger and keep recording due to the truck's movement, but never see the person with 10-15 in the + images.
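A rough way to see why 10-15 extra images falls short of a 19+ sec wait is the arithmetic below. It is only a sketch: it assumes the + images are analyzed at a fixed interval, and the `interval_ms` value is a hypothetical parameter, not a setting reported in this thread. The function names are made up for illustration too.

```python
import math


def window_covered_s(extra_images: int, interval_ms: float) -> float:
    """Seconds of analysis covered by a given number of extra images.

    interval_ms is an ASSUMED fixed gap between analyzed images.
    """
    return extra_images * interval_ms / 1000


def images_needed(delay_s: float, interval_ms: float) -> int:
    """Smallest number of extra images whose window spans delay_s seconds."""
    return math.ceil(delay_s * 1000 / interval_ms)
```

With an assumed interval of 1000 ms, `window_covered_s(15, 1000)` gives 15.0 secs, which ends before the driver shows up at 19+ secs, while `images_needed(19, 1000)` gives 19. A shorter interval needs proportionally more images, which is one reason a large value like 999 acts as a catch-all.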

I tried using 999 with several of my cams, but I don't think my computer was up to the task. It sent my CPU skyrocketing when two or more cameras triggered at the same time.

I'm stuck using the CPU version of DS (no NVIDIA card).