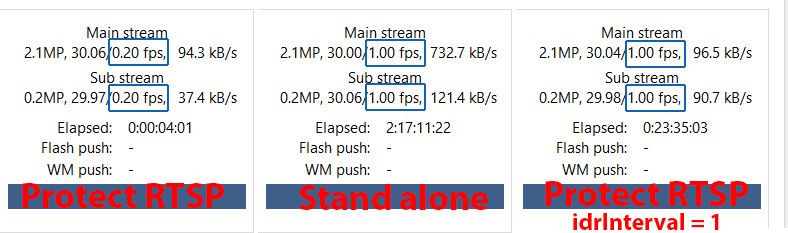

Yes, that's correct. However, as mentioned, they are using unique RTSP URLs, and THAT is where the stream is sourced. Many NVRs work the same way. I think you're missing that this actually works: the problem has to do with keyframes, as another member has mentioned, and nothing at all to do with the cameras sharing the same IP. As I've already shown, it's the RTSP URL that makes them unique, and they still work in Blue Iris using this method.

If both cameras have the EXACT same IP and nothing after the port number, then of course you have a clone of a camera, since both point at the same address. However, what I am posting is actually a URL. Forgive me for not adding rtsp:// to the beginning of it, but the forward slash further to the right in both of the examples I showed makes it fairly clear that this is the camera's URL, and that is what makes it unique.

Here's further clarification if you are not sure of the difference between an IP and an RTSP URL. After port "7447" you can clearly see different characters, which make these unique RTSP URLs, and each camera's stream is fed from its own URL. The IP has nothing to do with this at all: you could have 50 different RTSP URLs and still use them in BI even if the IP address and port are the same for every camera. It's the characters after the forward slash that make each RTSP stream unique and NOT a clone.

Camera Office = rtsp://192.168.1.120:7447/JJnG64KrxTHEzSCP

Camera Theatre = rtsp://192.168.1.120:7447/MMtG64LryuKKzTTY
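
If it helps to see the point in code, here is a minimal Python sketch that splits the two URLs above into host, port, and path using the standard library. The URLs are written in the usual `rtsp://` double-slash form so the parser can find the host, and the `streams` dictionary and variable names are just my own illustration, not anything Blue Iris itself uses.

```python
from urllib.parse import urlsplit

# The two example streams from this post, in standard rtsp:// form.
streams = {
    "Office": "rtsp://192.168.1.120:7447/JJnG64KrxTHEzSCP",
    "Theatre": "rtsp://192.168.1.120:7447/MMtG64LryuKKzTTY",
}

# Split each URL into its components (scheme, host, port, path).
parts = {name: urlsplit(url) for name, url in streams.items()}

# Both streams share a single (IP, port) pair...
same_endpoint = len({(p.hostname, p.port) for p in parts.values()}) == 1
# ...but every stream has its own path after the forward slash.
unique_paths = len({p.path for p in parts.values()}) == len(parts)

for name, p in parts.items():
    print(f"{name}: host={p.hostname} port={p.port} path={p.path}")
print("same IP and port:", same_endpoint)
print("unique stream paths:", unique_paths)
```

Both checks come out true for these two URLs: the IP and port match, and the path after the slash is what tells the streams apart.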